FORCAST Redux Developer’s Manual¶

Introduction¶

Document Purpose¶

This document is intended to provide all the information necessary to maintain the FORCAST Redux pipeline, used to produce Level 2, 3, and 4 reduced products for FORCAST imaging and grism data, in either manual or automatic mode. Level 2 is defined as data that has been processed to correct for instrumental effects; Level 3 is defined as flux-calibrated data; Level 4 is any higher data product. A more general introduction to the data reduction procedure and the scientific justification of the algorithms is available in the FORCAST Redux User’s Manual.

This manual applies to FORCAST Redux version 2.7.0.

Redux Revision History¶

FORCAST Redux was developed as five separate software packages: DRIP, which provides the FORCAST-specific processing algorithms; PypeUtils, which provides general-purpose scientific algorithms; PySpextool, which provides spectroscopic data reduction algorithms; PypeCal, which provides photometry and flux calibration algorithms; and Redux, which provides the interactive GUI, the automatic pipeline wrapper, and the supporting structure to call the FORCAST algorithms.

DRIP is a Python translation of an IDL package developed by Dr. Luke Keller and Dr. Marc Berthoud for the reduction of FORCAST data. Version 1.0.0 of the IDL package was released for use at SOFIA in July 2013. DRIP originally contained a set of imaging reduction functions, an object-oriented structure for calling these functions, an automatic pipeline, and an interactive GUI for manual reductions. The package also supported spectroscopic reductions with an interface developed in parallel to the DRIP interface, called FG (FORCAST Grism). DRIP v2.0.0 was developed in 2019 and 2020, by Daniel Perera and Melanie Clarke, as a full reimplementation in Python. This version translates only the imaging algorithms from DRIP, replacing the other functionality with PySpextool algorithms and Redux structures.

PypeUtils was developed as a shared code base for SOFIA Python pipelines, primarily by Daniel Perera for USRA/SOFIA. It contains any algorithms or utilities likely to be of general use for data reduction pipelines. From this package, the FORCAST pipeline uses some FITS handling utilities, multiprocessing tools, image manipulation algorithms, and interpolation and resampling functions.

Like DRIP, PySpextool is a translation of an earlier SOFIA IDL library, called FSpextool. FSpextool was built on top of a pre-release version of Spextool 4, an IDL-based package developed by Dr. Michael Cushing and Dr. William Vacca for the reduction of data from the SpeX instrument on the NASA Infrared Telescope Facility (IRTF). Spextool was originally released in October 2000, and has undergone a number of major and minor revisions since then. The last stable public release was v4.1, released January 2016. As Spextool does not natively support automatic command-line processing, FSpextool for SOFIA adapted the Spextool library to the SOFIA architecture and instruments; version 1.0.0 was originally released for use at SOFIA in July 2013. PySpextool is a Python translation of the core algorithms in this package, developed by Daniel Perera and Melanie Clarke, and first released at SOFIA for use in the FIFI-LS pipeline in October 2019.

PypeCal is a Python translation of the IDL PipeCal package, developed by the SOFIA DPS team to provide photometry and flux calibration algorithms that may be used to calibrate imaging data from any instrument, given appropriate reference data. PipeCal was originally developed by Dr. Miguel Charcos-Llorens and Dr. William Vacca as a set of IDL and shell scripts that were run independently of the Level 2 pipeline, then was refactored by Melanie Clarke for incorporation into the Redux pipeline. It was first incorporated into FORCAST Redux in version 1.0.4, which was released for use in May 2015. PypeCal is a full translation of this package, developed by Dr. Rachel Vander Vliet and Melanie Clarke, and first released for use at SOFIA with the HAWC+ pipeline in February 2019.

Redux was originally developed to be a general-purpose interface to IDL data reduction algorithms. It provided an interactive GUI and an object-oriented structure for calling data reduction processes, but it did not provide its own data reduction algorithms. It was developed by Melanie Clarke, for the SOFIA DPS team, to provide a consistent front-end to the data reduction pipelines for multiple instruments and modes, including FORCAST. It was redesigned and reimplemented in Python, with similar functionality, to support Python pipelines for SOFIA. The first release of the IDL version was in December 2013; the Python version was first released to support the HAWC+ pipeline in September 2018.

In 2020, all SOFIA pipeline packages were unified into a single package, called sofia_redux. The interface package (Redux) was renamed to sofia_redux.pipeline, PypeUtils was renamed to sofia_redux.toolkit, PypeCal was renamed to sofia_redux.calibration, PySpextool was renamed to sofia_redux.spectroscopy, and the DRIP package was renamed to sofia_redux.instruments.forcast. An additional package, to support data visualization, was added as sofia_redux.visualization.

Overview of Software Structure¶

The sofia_redux package has several sub-modules organized by functionality:

sofia_redux

├── calibration

├── instruments

│ ├── exes

│ ├── fifi_ls

│ ├── flitecam

│ ├── forcast

│ └── hawc

├── pipeline

├── scan

├── spectroscopy

├── toolkit

└── visualization

The modules used in the FORCAST pipeline are described below.

sofia_redux.instruments.forcast¶

The sofia_redux.instruments.forcast package is written in Python using

standard scientific tools and libraries.

The data reduction algorithms used by the pipeline are straightforward functions that generally take a data array, corresponding to a single image file, as an argument and return the processed image array as a result. They generally also take as secondary input a variance array to process alongside the image, a header array to track metadata, and keyword parameters to specify non-default settings. Parameters for these functions may also be provided via a configuration file packaged with the software (dripconf.txt), which is read into a global variable. Redux manipulates this global variable as necessary to provide non-default parameters to the data processing functions.
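
As a rough illustration of this calling convention, the sketch below defines a toy processing step. The function name `subtract_level`, the `CONFIGURATION` dictionary, and the plain `dict` used as a header are all hypothetical stand-ins chosen for this example; they are not part of sofia_redux.

```python
import numpy as np

# Hypothetical stand-in for the global configuration that the real
# package would populate from dripconf.txt.
CONFIGURATION = {"subtract_level": 10.0}


def subtract_level(data, header=None, variance=None, level=None):
    """Toy step: subtract a constant level from an image (illustration only)."""
    # A keyword parameter overrides the configured default.
    if level is None:
        level = CONFIGURATION.get("subtract_level", 0.0)

    result = np.asarray(data, dtype=float) - level

    # Record what was done in the (dict-like) header, if one was given.
    if header is not None:
        header["HISTORY"] = f"Subtracted level {level}"

    # Subtracting a constant does not change the variance.
    if variance is None:
        return result
    return result, np.asarray(variance, dtype=float).copy()
```

Calling `subtract_level(image)` uses the configured default, while `subtract_level(image, level=5.0)` overrides it for a single call, which mirrors the two ways of supplying parameters described above.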

The forcast module also stores any reference data needed by the FORCAST

pipeline, in either imaging or grism mode. This includes bad pixel masks,

nonlinearity coefficients, pinhole masks for imaging distortion correction,

wavelength calibration files, atmospheric transmission spectra, spectral

standard models, and instrument response spectra. The default files may

vary by date; these defaults are managed by the getcalpath function

in the forcast module. New date configurations may be added to the

caldefault.txt files in forcast/data/caldefault.txt and

forcast/data/grism/caldefault.txt.
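
The idea of date-dependent defaults can be sketched as follows. This is not the getcalpath implementation: the table layout (an end date followed by file names, with 99999999 as a catch-all), the function name `select_defaults`, and the file names are assumptions made for illustration.

```python
def select_defaults(table_text, obs_date):
    """Return the file names from the first row whose end date covers obs_date.

    Assumed layout: each non-comment line is "<end_date> <file> <file> ...",
    where end_date is an integer in YYYYMMDD form.
    """
    rows = []
    for line in table_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        fields = line.split()
        rows.append((int(fields[0]), fields[1:]))

    # Check the earliest end dates first.
    rows.sort(key=lambda row: row[0])
    for end_date, files in rows:
        if obs_date <= end_date:
            return files
    return None


# Hypothetical table contents, for illustration only.
EXAMPLE_TABLE = """
# end_date  badpix_file          nonlin_file
20151231    badpix_2015.fits     nonlin_2015.txt
99999999    badpix_current.fits  nonlin_current.txt
"""
```

Under these assumptions, adding a new date configuration amounts to adding one row to the table; no code change is needed.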

sofia_redux.toolkit¶

sofia_redux.toolkit is a repository for classes and functions of general usefulness,

intended to support multiple SOFIA pipelines. It contains several submodules,

for interpolation, image manipulation, multiprocessing support, numerical calculations, and

FITS handling. Most utilities are simple functions that take input

as arguments and return output values. Some more complicated functionality

is implemented in several related classes; see the sofia_redux.toolkit.resampling

documentation for more information.

sofia_redux.spectroscopy¶

The sofia_redux.spectroscopy package contains a library of general-purpose

spectroscopic functions. The FORCAST pipeline uses these algorithms

for spectroscopic image rectification, aperture identification, and

spectral extraction and calibration. Most of these algorithms are simple

functions that take spectroscopic data as input and return processed data

as output. However, the input and output values may be more complex than the

image processing algorithms in the forcast package. The Redux interface

in the pipeline package manages the input and output requirements for

FORCAST data and calls each function individually. See the

sofia_redux.spectroscopy API documentation for more information.

sofia_redux.calibration¶

The sofia_redux.calibration package contains the flux calibration algorithms used by Redux. These perform photometric or flux calibration calculations on input images and return their results. The complexity of this package is primarily in the organization of the reference data contained in the data directory. This directory contains a set of calibration data for each supported instrument (currently FORCAST and HAWC+). For each instrument, the configuration files are split into groups based on how often they are expected to change, as follows.

In the filter_def directory, there is a file that defines the mean/reference wavelength, pivot wavelength, and color leak correction factor for each filter name (SPECTEL). This file needs to vary by date only if a filter changes but keeps the same SPECTEL name. The color leak factor is currently 1.0 for all filters (no color correction).

In the response directory, there are response fit files that define the fit coefficients for each filter, with a separate file for the altitude fit, airmass fit, and PWV fit for each of single/dual modes. These should also change rarely.

In the ref_calfctr directory, there are files that define the average reference calibration factors to apply to science objects, by filter, along with the associated error value. It is expected that there will be a different ref_calfctr file for each flight series, produced by an analysis of all standards taken throughout the flight series.

In the standard_flux directory, there is a file that defines the flux model characteristics for each object: the percent error on the model, and the scale factor to apply to the model. The model error is 5% for all stars except BetaPeg (which is 9.43%), and is 20% for all asteroids. The scale factor is usually 1.0, with the exception of BetaUmi, which requires scaling by 1.18 from the model output files. This file should change rarely, if ever, except to add objects to it. Currently, the same file is used for all data. Also in the standard_flux directory, there are output files from the standard models for each object, for each applicable date if necessary. From these files, the lambda_mean column is read and compared to the mean wavelength in the filter_def file. If a matching wavelength is found, the corresponding value in the Fnu_mean column is used as the standard model flux. If there is a scale defined in the model_err file, it is applied to the flux value. These files should rarely change, but new ones will be added for asteroids any time they are observed. They may need to be redone if the filter wavelengths change.
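
The model flux lookup described in this paragraph can be sketched as below. The function name `standard_flux`, the list-of-dictionaries representation of the model file, the matching tolerance, and the numerical values are assumptions for illustration; only the column names lambda_mean and Fnu_mean come from the text.

```python
import math


def standard_flux(model_rows, filter_wavelength, scale=1.0, rel_tol=1e-3):
    """Return the scaled model flux for a filter, or None if no row matches.

    model_rows is assumed to be a list of dicts with 'lambda_mean' and
    'Fnu_mean' keys, one per line of a model output file.
    """
    for row in model_rows:
        # Match the model wavelength to the filter's mean wavelength.
        if math.isclose(row["lambda_mean"], filter_wavelength, rel_tol=rel_tol):
            return scale * row["Fnu_mean"]
    return None


# Hypothetical model rows, for illustration only.
EXAMPLE_MODEL = [
    {"lambda_mean": 11.1, "Fnu_mean": 20.0},
    {"lambda_mean": 19.7, "Fnu_mean": 10.0},
]
```

With a scale factor such as the 1.18 quoted for BetaUmi, the call would be `standard_flux(EXAMPLE_MODEL, 11.1, scale=1.18)`; a filter wavelength with no matching row returns `None`, so the caller can decide how to handle a missing model.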

To manage all these files, there is a top-level calibration configuration file (caldefault.txt), which contains the filenames for the filter definition file, the model error file, the reference cal factor file, and the response fit files, organized by date and filter. This table will most likely be updated once per series, when we have generated the reference calibration factors. There is also a standards configuration file (stddefault.txt) that identifies the model flux file to use by date and mode (single/dual). For stars, the date is set to 99999999, meaning that the models can be used for any date; asteroids may have multiple entries - one for each date they are observed. This file must be updated whenever there are new asteroid flux models to add, but it should be as simple as dropping the model file in the right directory and adding a line to the table to identify it.

sofia_redux.visualization¶

The sofia_redux.visualization package contains plotting and display routines for visualizing SOFIA data. For the FORCAST pipeline, this package currently provides an interactive spectral viewer and a module that supports generating quick-look preview images.

sofia_redux.pipeline¶

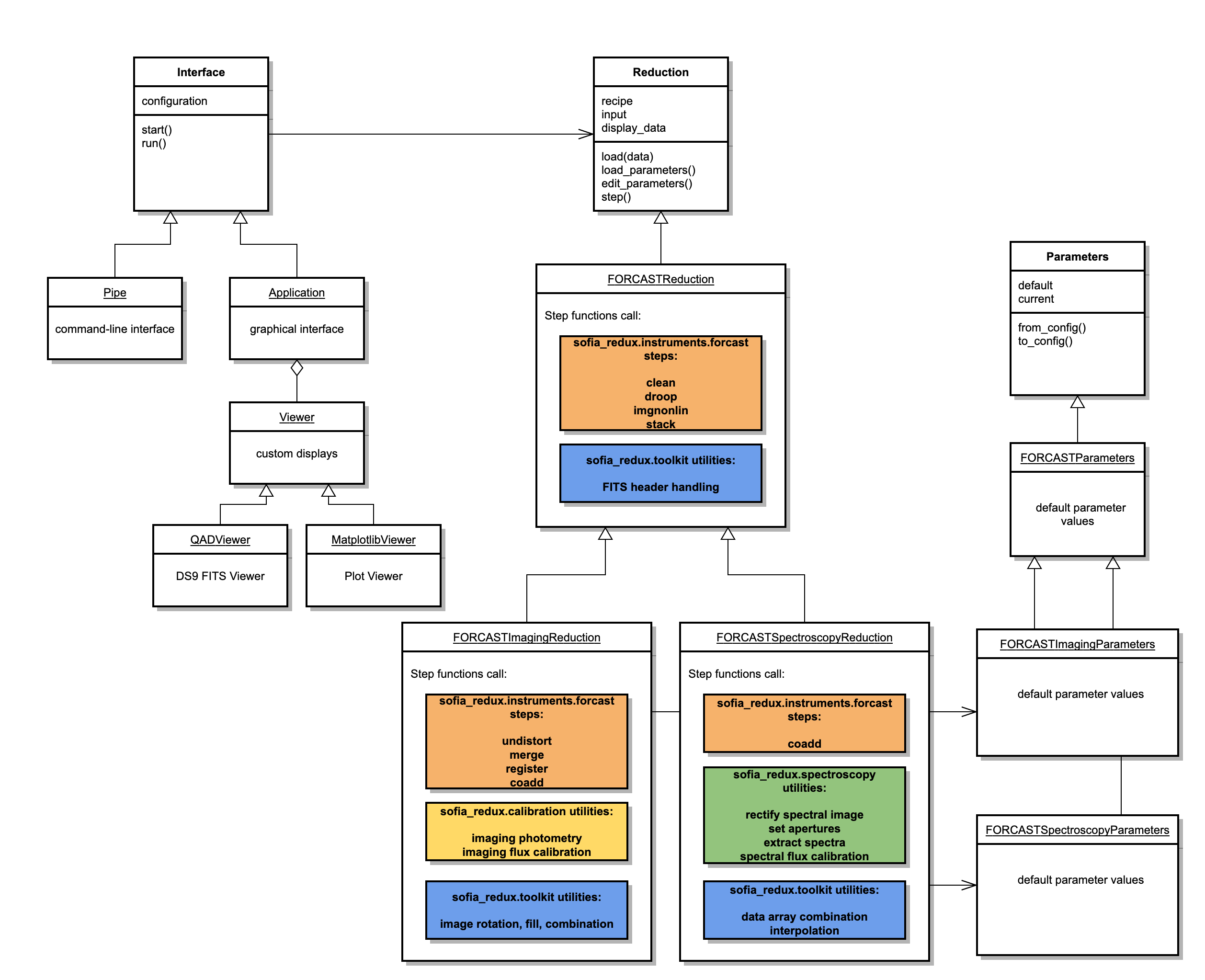

Design¶

Redux is designed to be a light-weight interface to data reduction pipelines. It contains the definitions of how reduction algorithms should be called for any given instrument, mode, or pipeline, in either a command-line interface (CLI) or graphical user interface (GUI) mode, but it does not contain the reduction algorithms themselves.

Redux is organized around the principle that

any data reduction procedure can be accomplished by running a linear

sequence of data reduction steps. It relies on a Reduction class that

defines what these steps are and in which order they should be run

(the reduction “recipe”). Reductions have an associated Parameter

class that defines what parameters the steps may accept. Because

reduction classes share common interaction methods, they can be

instantiated and called from a completely generic front-end GUI,

which provides the capability to load in raw data files, and then:

set the parameters for a reduction step,

run the step on all input data,

display the results of the processing,

and repeat this process for every step in sequence to complete the

reduction on the loaded data. In order to choose the correct

reduction object for a given data set, the interface uses a Chooser

class, which reads header information from loaded input files and

uses it to decide which reduction object to instantiate and return.

The GUI is a PyQt application, based around the Application

class. Because the GUI operations are completely separate from the

reduction operations, the automatic pipeline script is simply a wrapper

around a reduction object: the Pipe class uses the Chooser to

instantiate the Reduction, then calls its reduce method, which calls

each reduction step in order and reports any output files generated.

Both the Application and Pipe classes inherit from a common Interface

class that holds reduction objects and defines the methods for

interacting with them. The Application class additionally may

start and update custom data viewers associated with the

data reduction; these should inherit from the Redux Viewer class.

All reduction classes inherit from the generic Reduction class,

which defines the common interface for all reductions: how parameters

are initialized and modified, and how each step is called.

Each specific reduction class must then define

each data reduction step as a method that calls the appropriate

algorithm.

The reduction methods may contain any code necessary to accomplish the data reduction step. Typically, a reduction method will contain code to fetch the parameters for the method from the object’s associated Parameters class, then will call an external data reduction algorithm with appropriate parameter values, and store the results in the ‘input’ attribute to be available for the next processing step. If processing results in data that can be displayed, it should be placed in the ‘display_data’ attribute, in a format that can be recognized by the associated Viewers. The Redux GUI checks this attribute at the end of each data reduction step and displays the contents via the Viewer’s ‘display’ method.
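
The design described in this section can be sketched with a few toy classes. These are simplified stand-ins written for this example, not the classes in sofia_redux.pipeline: the class bodies, the step names `subtract_offset` and `scale`, the `INSTRUME` value `TOY`, and the `run_pipeline` helper are all hypothetical.

```python
class Reduction:
    """Toy stand-in for a generic reduction class (not the Redux API)."""

    recipe = []  # ordered step names; each names a method on the class

    def __init__(self):
        self.input = []         # data passed from one step to the next
        self.display_data = {}  # data a viewer could display
        self.parameters = {}    # step name -> parameter values

    def load(self, data):
        self.input = list(data)

    def step(self, name):
        # Look up the method named in the recipe and call it.
        getattr(self, name)()

    def reduce(self):
        # Run every step in recipe order and return the final data.
        for name in self.recipe:
            self.step(name)
        return self.input


class ToyReduction(Reduction):
    recipe = ["subtract_offset", "scale"]

    def __init__(self):
        super().__init__()
        self.parameters = {
            "subtract_offset": {"offset": 1.0},
            "scale": {"factor": 2.0},
        }

    def subtract_offset(self):
        offset = self.parameters["subtract_offset"]["offset"]
        self.input = [x - offset for x in self.input]
        self.display_data["image"] = self.input

    def scale(self):
        factor = self.parameters["scale"]["factor"]
        self.input = [x * factor for x in self.input]
        self.display_data["image"] = self.input


class Chooser:
    """Pick a reduction object from header information."""

    def choose_reduction(self, headers):
        instruments = {hdr.get("INSTRUME") for hdr in headers}
        if instruments == {"TOY"}:
            return ToyReduction()
        return None


def run_pipeline(data, headers):
    """Automatic mode: choose a reduction, load data, run all steps."""
    reduction = Chooser().choose_reduction(headers)
    if reduction is None:
        raise ValueError("no reduction available for these headers")
    reduction.load(data)
    return reduction.reduce()
```

Because the front end only calls `load`, `step`, and `reduce`, the same toy reduction could be driven step by step from a GUI or all at once from a script, which is the separation the design aims for.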

Parameters for data reduction are stored as a list of ParameterSet

objects, one for each reduction step. Parameter sets contain the key,

value, data type, and widget type information for every parameter.

A Parameters class may generate these parameter sets by

defining a default dictionary that associates step names with parameter

lists that define these values. This dictionary may be defined directly

in the Parameters class, or may be read in from an external configuration

file or software package, as appropriate for the reduction.
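
A default dictionary of this kind might look like the sketch below. The step names, parameter keys, widget type strings, and the `build_parameter_sets` helper are hypothetical; plain dictionaries stand in for ParameterSet objects.

```python
# Hypothetical defaults: step name -> list of parameter definitions.
DEFAULT = {
    "clean": [
        {"key": "save", "value": False, "dtype": "bool", "wtype": "check_box"},
        {"key": "threshold", "value": 5.0, "dtype": "float", "wtype": "text_box"},
    ],
    "stack": [
        {"key": "add_frames", "value": True, "dtype": "bool", "wtype": "check_box"},
    ],
}


def build_parameter_sets(recipe, default):
    """Return one parameter set (a dict keyed by parameter name) per step."""
    parameter_sets = []
    for step in recipe:
        pset = {}
        for par in default.get(step, []):
            # Copy each definition so edits do not alter the defaults.
            pset[par["key"]] = dict(par)
        parameter_sets.append(pset)
    return parameter_sets
```

Steps with no entry in the dictionary simply get an empty parameter set, so the list always has one element per recipe step.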

FORCAST Redux¶

To interface to the FORCAST pipeline algorithms, Redux defines

the FORCASTReduction, FORCASTImagingReduction, and

FORCASTSpectroscopyReduction as primary reduction classes, with associated

parameter classes FORCASTParameters, FORCASTImagingParameters, and

FORCASTSpectroscopyParameters. [1]

See Fig. 115 for a sketch of

the Redux classes used by the FORCAST pipeline.

The FORCASTReduction class holds definitions for algorithms applicable to

both imaging and spectroscopy data:

Check Headers: calls sofia_redux.instruments.forcast.hdcheck

Clean Images: calls sofia_redux.instruments.forcast.clean and sofia_redux.instruments.forcast.check_readout_shift

Correct Droop: calls sofia_redux.instruments.forcast.droop

Correct Nonlinearity: calls sofia_redux.instruments.forcast.imgnonlin and sofia_redux.instruments.forcast.background

Stack Chops/Nods: calls sofia_redux.instruments.forcast.stack

The FORCASTImagingReduction class inherits from the FORCASTReduction

class and additionally defines imaging-specific algorithms:

Correct Distortion: calls sofia_redux.instruments.forcast.undistort

Merge Chops/Nods: calls sofia_redux.instruments.forcast.merge

Register Images: calls sofia_redux.instruments.forcast.register_datasets

Telluric Correct: calls sofia_redux.calibration.pipecal_util.apply_tellcor and sofia_redux.calibration.pipecal_util.run_photometry

Coadd: calls sofia_redux.toolkit.image.coadd

Flux Calibrate: calls sofia_redux.calibration.pipecal_util.apply_fluxcal and sofia_redux.calibration.pipecal_util.run_photometry

Make Image Map: calls sofia_redux.visualization.quicklook.make_image

The FORCASTSpectroscopyReduction class inherits from the FORCASTReduction

class and additionally defines spectroscopy-specific algorithms:

Stack Dithers: calls sofia_redux.toolkit.image.combine_images

Make Profiles: calls sofia_redux.spectroscopy.rectify and sofia_redux.spectroscopy.mkspatprof

Locate Apertures: calls sofia_redux.spectroscopy.findapertures

Trace Continuum: calls sofia_redux.spectroscopy.tracespec

Set Apertures: calls sofia_redux.spectroscopy.getapertures and sofia_redux.spectroscopy.mkapmask

Subtract Background: calls sofia_redux.spectroscopy.extspec.col_subbg

Extract Spectra: calls sofia_redux.spectroscopy.extspec.extspec

Merge Apertures: calls sofia_redux.spectroscopy.getspecscale and sofia_redux.toolkit.image.combine_images

Calibrate Flux: calls sofia_redux.instruments.forcast.getatran and sofia_redux.spectroscopy.fluxcal

Combine Spectra: calls sofia_redux.toolkit.image.coadd, sofia_redux.instruments.forcast.register_datasets.get_shifts, and sofia_redux.toolkit.image.combine_images

Make Response: calls sofia_redux.instruments.forcast.getmodel

Combine Response: calls sofia_redux.toolkit.image.combine_images

Make Spectral Map: calls sofia_redux.visualization.quicklook.make_image

The recipe attribute for the reduction class specifies the above steps, in the order given, with general FORCAST algorithms preceding the imaging or spectroscopy ones.

If an intermediate file is loaded, its product type is identified from the PRODTYPE keyword in its header, and the prodtype_map attribute is used to identify the next step in the recipe. This allows reductions to be picked up at any point, from a saved intermediate file. For more information on the scientific goals and methods used in each step, see the FORCAST Redux User’s Manual.
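
Resuming from an intermediate file can be sketched as a lookup from product type to recipe position. The step names, product type strings, and the `next_step_index` helper below are illustrative assumptions and may not match the values used by the FORCAST reduction classes; a plain dictionary stands in for a FITS header.

```python
# Hypothetical recipe and step -> product type mapping.
RECIPE = ["checkhead", "clean", "droop", "nonlin", "stack"]
PRODTYPE_MAP = {
    "checkhead": "checked",
    "clean": "cleaned",
    "droop": "drooped",
    "nonlin": "linearized",
    "stack": "stacked",
}


def next_step_index(header, recipe, prodtype_map):
    """Return the index of the first step still to be run.

    Files with a missing or unrecognized PRODTYPE start at step 0.
    """
    prodtype = str(header.get("PRODTYPE", "")).strip().lower()
    for index, step in enumerate(recipe):
        if prodtype_map.get(step) == prodtype:
            # This step produced the file, so resume at the next one.
            return index + 1
    return 0
```

For a file whose header holds a PRODTYPE of `drooped`, this returns 3, so `RECIPE[3:]` lists the steps that remain.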

The FORCAST reduction classes also contain several helper functions that

assist in reading and writing files on disk, and identifying which

data to display in the interactive GUI. Display is performed via

the QADViewer class provided by the Redux package. Spectroscopic diagnostic

data is additionally displayed by the MatplotlibViewer class.

Fig. 115 FORCAST Redux class diagram.¶

Detailed Algorithm Information¶

The following sections list detailed information on the functions and procedures most likely to be of interest to the developer.

sofia_redux.instruments.forcast¶

sofia_redux.instruments.forcast.background Module¶

Functions¶

|

Calculate the background of the image |

|

Return the most common data point from discrete or nominal data |

sofia_redux.instruments.forcast.calcvar Module¶

Functions¶

|

Calculate the read and Poisson noise contributions to the variance from raw FORCAST images |

sofia_redux.instruments.forcast.check_readout_shift Module¶

Functions¶

|

Check data for 16 pixel shift |

sofia_redux.instruments.forcast.chopnod_properties Module¶

Functions¶

|

Returns the chop nod properties in the detector reference frame |

sofia_redux.instruments.forcast.clean Module¶

Functions¶

|

Replaces bad pixels in an image with approximate values |

sofia_redux.instruments.forcast.configuration Module¶

DRIP configuration

Functions¶

|

Load the DRIP configuration file |

sofia_redux.instruments.forcast.distcorr_model Module¶

Functions¶

|

Read the pinhole file and return a dataframe |

|

Generate the pinhole model from parameters. |

|

View the pinhole model and optionally write to FITS file. |

|

Generate model array of pinholes based on input file |

sofia_redux.instruments.forcast.droop Module¶

Functions¶

|

Corrects droop electronic signal |

sofia_redux.instruments.forcast.getatran Module¶

Functions¶

Clear all data from the atran cache. |

|

|

Retrieves atmospheric transmission data from the atran cache. |

|

Store atran data in the atran cache. |

|

Retrieve reference atmospheric transmission data. |

sofia_redux.instruments.forcast.getcalpath Module¶

Functions¶

|

Return the path of the ancillary files used for the pipeline. |

sofia_redux.instruments.forcast.getdetchan Module¶

Functions¶

|

Retrieve DETCHAN keyword value from header as either SW or LW |

sofia_redux.instruments.forcast.getmodel Module¶

Functions¶

Clear all data from the model cache. |

|

|

Retrieves model data from the model cache. |

|

Store model data in the model cache. |

|

Retrieve reference standard model data. |

sofia_redux.instruments.forcast.getpar Module¶

Functions¶

|

Get a header or configuration parameter |

sofia_redux.instruments.forcast.hdcheck Module¶

Functions¶

|

Return if a keyword header value meets a condition |

|

Checks whether the AND/OR conditions of keyword definitions are met by the header |

|

Check if a header of a FITS file matches a keyword requirement |

|

Validate all keywords in a header against the keywords table |

|

Validate filename header against keywords table |

|

Checks file headers against validity criteria |

sofia_redux.instruments.forcast.hdmerge Module¶

Functions¶

|

Merge input headers. |

sofia_redux.instruments.forcast.hdrequirements Module¶

Functions¶

|

Parse a condition value defined in the keyword table |

|

Returns a dataframe containing the header requirements |

sofia_redux.instruments.forcast.imgnonlin Module¶

Functions¶

|

Return the signal level from the header |

|

Read header and determine camera |

|

Get non-linearity scale from the header based on camera and capacitance |

|

Get non-linearity coefficients from the header based on camera and capacitance |

|

Get non-linearity coefficient limits from the header based on camera and capacitance |

|

Corrects for non-linearity in detector response due to general background. |

sofia_redux.instruments.forcast.imgshift_header Module¶

Functions¶

|

Calculates the shift in the pixel frame for merging an image |

sofia_redux.instruments.forcast.jbclean Module¶

Functions¶

|

Remove jailbars with FFT |

|

Remove jailbars using the median of correlated columns |

|

Removes "jailbar" artifacts from images |

sofia_redux.instruments.forcast.merge Module¶

Functions¶

|

Merge positive and negative instances of the source in the images |

sofia_redux.instruments.forcast.merge_centroid Module¶

Functions¶

|

Merge an image using a centroid algorithm |

sofia_redux.instruments.forcast.merge_correlation Module¶

Functions¶

|

Merge an image using a correlation algorithm |

sofia_redux.instruments.forcast.merge_shift Module¶

Functions¶

|

Merge an image by shifting the input data by the input values |

sofia_redux.instruments.forcast.peakfind Module¶

Functions¶

|

Find peaks (stars) in FORCAST images |

Classes¶

|

Configure and run peak finding algorithm. |

sofia_redux.instruments.forcast.read_section Module¶

Functions¶

|

Read the section in the configuration file and check if it's correct |

sofia_redux.instruments.forcast.readfits Module¶

Functions¶

|

Add an id or file name to a header as PARENTn |

|

Returns the array from the input file |

sofia_redux.instruments.forcast.readmode Module¶

Functions¶

|

Read the chop/nod and instrument mode from the header |

sofia_redux.instruments.forcast.register Module¶

Functions¶

|

Shift an image for coadding using a centroid algorithm |

|

Shift an image for coaddition using a correlation algorithm |

|

Shift an image for coaddition using header information |

|

Shift an image for coaddition using header information |

|

Use dither data to shift an input image to a reference image. |

sofia_redux.instruments.forcast.register_datasets Module¶

Functions¶

|

Return the pixel offset of a point on header relative to refheader |

|

Returns all shifts relative to the reference set. |

|

Expands an array to a new shape |

|

Shifts an individual data set |

|

Shifts datasets onto common frame |

|

Resize all datasets to the same shape |

|

Registers multiple sets of data to the same frame |

sofia_redux.instruments.forcast.rotate Module¶

Functions¶

|

Rotate an image by the specified amount |

|

Rotate the coordinates about a center point by a given angle. |

|

Rotate a single (y, x) point about a given center. |

|

Rotate an image about a point by a given angle. |

|

Rotate an image, using a mask for interpolation and edge corrections. |

sofia_redux.instruments.forcast.shift Module¶

Functions¶

|

Shift an image by the specified amount |

sofia_redux.instruments.forcast.stack Module¶

Functions¶

|

Add data frames together at the same stack (position) |

|

Return frame scale levels |

|

Run the stacking algorithm on C2NC2 data |

|

Run the stacking algorithm on MAP (Mapping mode) data |

|

Run the stacking algorithm on C3D data (3 position chop with dither) |

|

Run the stacking algorithm on CM data (multi-position chop) |

|

Run the stacking algorithm on STARE data |

|

Convert data to mega-electrons per second |

|

Remove background from data |

|

Subtracts chop/nod pairs to remove astronomical/telescope background |

sofia_redux.instruments.forcast.undistort Module¶

Functions¶

Default values of the model and instrument points to be warped and fitted. |

|

|

Get the pinhole model and coefficients and update header. |

|

Calculate the position of x0, y0 after a transformation |

|

Update the WCS in the header according to the transform |

|

Transform an image and update header using coordinate point mapping |

|

Rebin the image to square pixels |

|

Frame an image in the center of a new image and add border |

|

Find single peak in image and update SRCPOS in header |

|

Corrects distortion due to camera optics. |

sofia_redux.toolkit¶

sofia_redux.toolkit.convolve.base Module¶

Classes¶

|

Convolution class allowing error propagation. |

Class Inheritance Diagram¶

Inheritance diagram: ConvolveBase inherits from Model.

sofia_redux.toolkit.convolve.kernel Module¶

Functions¶

|

Apply a kernel over multiple features |

|

Convolve an N-dimensional array with a user defined kernel or fixed box. |

|

Apply a least-squares (Savitzky-Golay) polynomial filter |

Classes¶

|

Generic convolution with a kernel |

|

Convolution with a box kernel (mean) |

|

Convolve using Savitzky-Golay filter |

Class Inheritance Diagram¶

Inheritance diagram: KernelConvolve and SavgolConvolve inherit from ConvolveBase, which inherits from Model; BoxConvolve inherits from KernelConvolve.

sofia_redux.toolkit.convolve.filter Module¶

Functions¶

|

Apply Savitzky-Golay filter to an array of arbitrary features |

|

Creates the correct windows for given order and samples |

|

Edge enhancement Sobel filter for n-dimensional images. |

sofia_redux.toolkit.fitting.fitpeaks1d Module¶

Functions¶

|

Simple convenience lookup to get width parameter name for various models. |

|

Creates the object fitting a model to data |

|

A simple wrapper to fit model to the data |

|

Convolve a model with a box (or another model) |

|

Create the |

|

Perform an initial search for peaks in the data |

|

Return a background model with initialized parameters |

|

Refine the initial fit and return a set of models |

|

Fit peaks (and optionally background) to a 1D set of data. |

|

Default data preparation for |

|

Default peak guess function for |

sofia_redux.toolkit.fitting.polynomial Module¶

Functions¶

|

Returns exponents for given polynomial orders in arbitrary dimensions. |

|

Create a system of linear equations to solve n-D polynomials |

|

Create a system of linear equations |

|

Linear equation solution by Gauss-Jordan elimination and matrix inversion |

|

Evaluate polynomial coefficients at x |

|

Evaluate a polynomial in multiple features |

|

Calculate the zeroth order polynomial coefficients and covariance |

|

Fit a polynomial to data samples using linear least-squares. |

|

Fit a polynomial to data samples using Gauss-Jordan elimination. |

|

Solve for polynomial coefficients using non-linear least squares fit |

|

Fits polynomial coefficients to N-dimensional data. |

|

|

|

|

|

|

|

Least squares polynomial fit to a surface |

|

Evaluate 2D polynomial coefficients |

|

Interpolate 2D data using polynomial regression (global) |

Classes¶

|

Fits and evaluates polynomials in N-dimensions. |

Class Inheritance Diagram¶

[Inheritance diagram (parent → child): Model → Polyfit]

sofia_redux.toolkit.image.adjust Module¶

Functions¶

|

Shift an image by the specified amount. |

|

Rotate an image. |

|

Rebins an array to new shape |

|

Shifts an image by x and y offsets |

|

Replicates IDL rotate function |

|

Un-rotates an image using IDL style rotation types |

|

Return the pixel offset between an image and a reference |

|

Upsampled DFT by matrix multiplication. |

sofia_redux.toolkit.image.coadd Module¶

Functions¶

|

Coadd total intensity or spectral images. |

sofia_redux.toolkit.image.combine Module¶

Functions¶

|

Combine input image arrays. |

sofia_redux.toolkit.image.fill Module¶

Functions¶

|

|

|

|

|

Interpolates over image using a mask. |

|

Fills in NaN values in an image |

|

Clip a polygon to a square unit pixel |

|

Finds all pixels at least partially inside a specified polygon |

|

Uses the shoelace method to calculate area of a polygon |

|

Get pixel weights - deprecated by polyfillaa |
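
The shoelace method named in the table above can be sketched in a few lines. The following is a minimal stand-alone illustration, not the toolkit implementation; the function name `polygon_area` and its argument layout are assumptions made for this example.

```python
def polygon_area(vertices):
    """Return the unsigned area of a simple polygon (shoelace formula).

    `vertices` is a sequence of (x, y) pairs listed in order around
    the polygon. This is a hypothetical helper for illustration only.
    """
    n = len(vertices)
    if n < 3:
        # A point or a line segment encloses no area.
        return 0.0
    twice_area = 0.0
    for i in range(n):
        x0, y0 = vertices[i]
        x1, y1 = vertices[(i + 1) % n]  # wrap around to the first vertex
        twice_area += x0 * y1 - x1 * y0
    return abs(twice_area) / 2.0


unit_pixel = [(0, 0), (1, 0), (1, 1), (0, 1)]
triangle = [(0, 0), (4, 0), (0, 3)]
```

Taking the absolute value makes the result independent of whether the vertices are listed clockwise or counter-clockwise.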

sofia_redux.toolkit.image.resize Module¶

Functions¶

|

Replacement for |

sofia_redux.toolkit.image.smooth Module¶

Functions¶

|

Quick and simple cubic polynomial fit to surface - no checks |

|

Returns the coefficients necessary for bicubic interpolation. |

|

|

|

Fit a plane to distribution of points. |

|

Fits a smooth surface to data using J. |

sofia_redux.toolkit.image.utilities Module¶

Functions¶

|

Convert from |

|

Allow translation of modes for scipy versions >= 1.6.0 |

|

Clip the array to the range of original values. |

|

A drop in replacement for |

sofia_redux.toolkit.image.warp Module¶

Functions¶

|

Warp data using transformation defined by two sets of coordinates |

|

Performs polynomial spatial warping |

|

Warp an image by mapping 2 coordinate sets with a polynomial transform. |

|

Check if a transform is homographic. |

|

Apply a metric transform to the supplied coordinates. |

|

Apply coefficients to polynomial terms. |

|

Estimate the polynomial transform for (x, y) coordinates. |

|

Apply the warping between two sets of coordinates to another. |

|

Warp the indices of an array with a given shape using a polynomial. |

|

Warp an n-dimensional image according to a given coordinate transform. |

Classes¶

|

Initialize a polynomial transform. |

Class Inheritance Diagram¶

[Inheritance diagram (parent → child): ABC → PolynomialTransform]

sofia_redux.toolkit.interpolate.interpolate Module¶

Functions¶

|

Shift an equally spaced array of data values by an offset |

|

Interpolate values containing NaNs |

|

Perform cubic spline (tensioned) interpolation |

|

Perform a sinc interpolation on a data set |

|

Perform linear interpolation at a single point. |

|

Perform linear interpolation at a single point with error propagation. |

|

Perform linear interpolation of errors |

|

Propagate errors using Delaunay triangulation in N-dimensions |

|

Propagate errors using linear interpolation in N-dimensions |

|

Find the effective index of a function value in an ordered vector with NaN handling. |

|

Finds the effective index of a function value in an ordered array. |
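
As an illustration of linear interpolation at a single point with error propagation, the sketch below interpolates between two samples and combines their uncertainties in quadrature. It assumes the two input errors are independent; the function name and signature are hypothetical and do not reproduce the toolkit interface.

```python
import math


def linear_interp_with_error(x, x0, y0, e0, x1, y1, e1):
    """Linearly interpolate to x between (x0, y0 +/- e0) and (x1, y1 +/- e1).

    Assumes independent errors on y0 and y1 (an assumption of this
    sketch), so the weighted errors add in quadrature.
    """
    t = (x - x0) / (x1 - x0)  # fractional distance from x0 to x1
    y = (1.0 - t) * y0 + t * y1
    err = math.sqrt(((1.0 - t) * e0) ** 2 + (t * e1) ** 2)
    return y, err


value, error = linear_interp_with_error(0.5, 0.0, 0.0, 3.0, 1.0, 2.0, 4.0)
```

At either endpoint the propagated error reduces to the error of that sample, as expected.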

Classes¶

|

Fast interpolation on a regular grid |

Class Inheritance Diagram¶

[Inheritance diagram: Interpolate (no documented parent classes)]

sofia_redux.toolkit.resampling Package¶

Functions¶

|

Set certain arrays to a fixed size based on a mask array. |

|

Return the sum of an array. |

Returns distance weights based on offsets and scaled adaptive weighting. |

|

Returns distance weights based on offsets and shaped adaptive weighting. |

|

|

Returns a distance weighting based on coordinate offsets. |

Returns distance weights based on coordinate offsets and matrix operation. |

|

|

Calculate the final weighting factor based on errors and other weights. |

|

Defines a hyperrectangle edge around a coordinate distribution. |

|

Defines an edge based on statistical deviation from a sample distribution. |

|

Defines an ellipsoid edge around a coordinate distribution. |

|

Defines an edge based on the range of coordinates in each dimension. |

|

Determine whether a reference position is within a distribution "edge". |

|

Checks the sample distribution is suitable for a polynomial fit order. |

|

Checks maximum order for sample coordinates bounding a reference. |

|

Checks maximum order based only on the number of samples. |

|

Checks maximum order based on unique samples, irrespective of reference. |

|

Uses |

|

Converts a Python iterable to a Numba list for use in jitted functions. |

|

Calculate the covariance of a distribution. |

|

Returns the mean coordinate of a distribution. |

|

Calculates the inverse covariance matrix of the fit coefficients. |

|

Return the weighted mean-square-cross-product (mscp) of sample derivatives. |

|

Return variance at each coordinate based on coordinate distribution. |

|

Calculates covariance matrix inverse of fit coefficients from mean error. |

|

Calculates the derivative of a polynomial at a single point. |

|

Calculates the derivative of a polynomial at multiple points. |

|

Fast 1-D integration using Trapezium method. |

|

Returns the dot product of phi and coefficients. |

|

Calculates variance given the polynomial terms of a coordinate. |

|

Calculates the residual of a polynomial fit to data. |

|

Evaluate a special case of the logistic function where f(x0) = 0.5. |

|

Evaluate the generalized logistic function. |

|

Derive polynomial terms for a coordinate set given polynomial exponents. |

|

Return values of a multivariate Gaussian in K-dimensional coordinates. |

|

Fill output arrays with set values on fit failure. |

|

Variance at reference coordinate derived from distribution uncertainty. |

|

Creates a mapping from polynomial exponents to derivatives. |

|

Define a set of polynomial exponents. |

|

Derive polynomial terms given coordinates and polynomial exponents. |

|

Returns the relative density of samples compared to a uniform distribution. |

|

Resample data using local polynomial fitting. |

|

Resample data using local polynomial fitting. |

|

Apply scaling factors and offsets to N-dimensional data. |

|

Applies the function |

|

Applies the function |

|

Applies the function |

|

Applies the function |

|

Wrapper for |

|

Scales a Gaussian weighting kernel based on a prior fit. |

|

Wrapper for |

|

Shape and scale the weighting kernel based on a prior fit. |

|

Evaluate a scaled and shifted logistic function. |

|

Derive polynomial terms for a single coordinate given polynomial exponents. |

|

Convenience function returning matrices suitable for linear algebra. |

|

Find least squares solution of Ax=B and rank of A. |

|

Solve for a fit at a single coordinate. |

|

Solve all fits within one intersection block. |

|

Inverse covariance matrices on fit coefficients from errors and residuals. |

|

Return the weighted mean of data, variance, and reduced chi-squared. |

|

Derive a polynomial fit from samples, then calculate fit at single point. |

|

Return the reduced chi-squared given residuals and sample errors. |

|

Return the reduced chi-squared given residuals and constant variance. |

|

Calculate the sum-of-squares-and-cross-products of a matrix. |

|

A sigmoid function used by the "shaped" adaptive resampling algorithm. |

|

Updates a mask, setting False values where weights are zero or non-finite. |

|

Determine the variance given offsets from the expected value. |

|

Calculate variance of a fit from the residuals of the fit to data. |

|

Calculate the weighted mean of a data set. |

|

Calculate the mean weighted variance. |

|

Utility function to calculate the biased weighted variance. |
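
Several of the functions above return a weighted mean together with its variance and a reduced chi-squared. A minimal sketch of that calculation is given below, assuming plain inverse-variance weights and N - 1 degrees of freedom. The name `weighted_mean_stats` is hypothetical, and the package functions may accept additional weights or use a different convention.

```python
def weighted_mean_stats(values, errors):
    """Return (mean, variance, reduced chi-squared) for weighted data.

    Weights are 1 / error**2 (an assumption of this sketch). The
    reduced chi-squared uses N - 1 degrees of freedom and is NaN when
    fewer than two samples are supplied.
    """
    weights = [1.0 / e ** 2 for e in errors]
    wsum = sum(weights)
    mean = sum(w * v for w, v in zip(weights, values)) / wsum
    variance = 1.0 / wsum  # variance of the weighted mean
    dof = len(values) - 1
    chi2 = sum(w * (v - mean) ** 2 for w, v in zip(weights, values))
    rchi2 = chi2 / dof if dof > 0 else float("nan")
    return mean, variance, rchi2


result = weighted_mean_stats([1.0, 3.0], [1.0, 1.0])
```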

Classes¶

|

Define and initialize a resampling grid. |

|

Create a tree structure for use with the resampling algorithm. |

alias of |

|

|

Class to resample data using kernel convolution. |

|

Class to resample data using local polynomial fits. |

Class Inheritance Diagram¶

[Inheritance diagram (parent → child): BaseGrid; BaseTree → PolynomialTree; ResampleBase → ResampleKernel; ResampleBase → ResamplePolynomial]

sofia_redux.toolkit.stats.stats Module¶

Functions¶

|

Determines the outliers in a distribution of data |

|

(Robustly) averages arrays along arbitrary axes. |

|

Combines a data set using median |

|

Computes statistics on a data set avoiding deviant points if requested |

|

Computes a mask derived from data Median Absolute Deviation (MAD). |
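
The idea behind a mask derived from the Median Absolute Deviation can be sketched as follows. This is an illustration only: the function name `mad_mask`, the default threshold, the 1.4826 Gaussian scaling factor, and the convention that True marks a good point are all assumptions of the example rather than documented behavior of the module.

```python
from statistics import median


def mad_mask(values, threshold=5.0):
    """Return a list of booleans, True where a value is not an outlier.

    A value is kept when it lies within `threshold` robust standard
    deviations of the median, where the robust standard deviation is
    estimated as 1.4826 * MAD (valid for Gaussian-distributed data).
    """
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # No scale can be estimated; keep every point in this sketch.
        return [True] * len(values)
    sigma = 1.4826 * mad
    return [abs(v - med) <= threshold * sigma for v in values]


data = [10.0, 10.2, 9.8, 10.1, 9.9, 50.0]
mask = mad_mask(data)
```

Because both the center and the scale are medians, a single deviant point such as the last value in `data` does not inflate the rejection threshold.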

sofia_redux.toolkit.utilities.base Module¶

Classes¶

|

Base model Class for fitting N-dimensional data |

Class Inheritance Diagram¶

[Inheritance diagram: Model (no documented parent classes)]

sofia_redux.toolkit.utilities.fits Module¶

Functions¶

|

Insert or replace a keyword and value in the header |

|

Add HISTORY message to a FITS header before the pipeline. |

|

Make a function to add HISTORY messages to a header, prefixed with a string. |

|

Retrieve the data and header from a FITS file |

|

Returns the header of a FITS file |

|

Returns the data from a FITS file |

|

Convert a FITS header to an array of strings |

|

Convert an array of strings to a FITS header |

|

Returns the HDUList from a FITS file |

|

Write an HDUList to disk. |

|

Get a key value from a header. |

|

Context manager to temporarily set the log level. |

|

Order headers based on contents. |

|

Merge input headers. |

sofia_redux.toolkit.utilities.func Module¶

Functions¶

|

Check for 'truthy' values. |

|

Check for valid numbers. |

|

Returns list sorted in a human friendly manner |

|

Check if a file exists, and optionally if it has the correct permissions. |

|

Convert a header datestring to seconds |

|

Convert a string to an int or float. |

|

Returns a slice of an array in arbitrary dimension. |

|

Sets a value to a valid number type |

|

Gaussian model for curve_fit |

|

Broadcast an array to the desired shape. |

|

Recursively update a dictionary |

|

|

|

|

|

Taylor expansion generator for Polynomial exponents |

|

Convert a number of bytes to a string with correct suffix |

|

Remove any samples containing NaNs from sample points |

|

Return a byte array the same size as the input array. |

|

Generate a 2-D Julia fractal image |

|

Derive a mask to trim NaNs from an array |

|

Emulates the behaviour of np.nansum for NumPy versions <= 1.9.0. |
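
Sorting in a human-friendly manner usually means that numbers embedded in strings compare numerically, so that file 2 precedes file 10. The sketch below shows one common way to do this with the standard library; `natural_sort` is a hypothetical name, and case-insensitive comparison of the text parts is an assumption of the example.

```python
import re


def natural_sort(names):
    """Return `names` sorted so that embedded integers compare numerically."""

    def key(name):
        # Splitting on a captured group alternates text and digit runs:
        # even indices are text, odd indices are digits.
        parts = re.split(r"(\d+)", name)
        return [int(p) if i % 2 else p.lower() for i, p in enumerate(parts)]

    return sorted(names, key=key)


files = ["f10.fits", "f2.fits", "f1.fits"]
ordered = natural_sort(files)
```

A plain `sorted(files)` would place `f10.fits` before `f2.fits`, because it compares the names character by character.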

sofia_redux.toolkit.utilities.multiprocessing Module¶

Functions¶

|

Returns the maximum number of CPU cores available |

|

Return the actual number of cores to use for a given number of jobs. |

|

Return a valid number of jobs in the range 1 <= jobs <= max_cores. |

|

Process a series of tasks in serial, or in parallel using joblib. |

|

Pickle an object and save to the given filename. |

|

Unpickle a string argument if it is a file, and return the result. |

|

Pickle a list of objects to a temporary directory. |

|

Restore pickle files to objects in-place. |

Return whether the process is running in the main thread. |

|

|

Context manager to temporarily log messages for unique processes/threads |

|

Context manager to output log messages during multiprocessing. |

|

Remove all temporary logging files/directories and handle log records. |

|

Return the results of the function in multitask and save log records. |

|

Store the log records in a pickle file rather than emitting. |

|

Wrap a function for use with |

Classes¶

A log handler for multitask. |

Class Inheritance Diagram¶

[Inheritance diagram (parent → child): Filterer → Handler → MultitaskHandler]

sofia_redux.spectroscopy¶

sofia_redux.spectroscopy.binspec Module¶

Functions¶

|

Bin a spectrum between lmin and lmax with bins delta wide |
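
To make the binning parameters concrete, the sketch below averages flux samples into bins of width delta between lmin and lmax. It is a deliberately simplified stand-in: it takes a plain mean of the samples falling in each bin, whereas the module function may weight by fractional pixel coverage. The name `bin_spectrum`, the half-open bin edges, and the use of NaN for empty bins are assumptions of this example.

```python
def bin_spectrum(wave, flux, lmin, lmax, delta):
    """Average flux into bins of width `delta` covering [lmin, lmax).

    Returns (bin centers, mean flux per bin). Samples outside the
    range are ignored; empty bins are reported as NaN.
    """
    nbins = int(round((lmax - lmin) / delta))
    sums = [0.0] * nbins
    counts = [0] * nbins
    for w, f in zip(wave, flux):
        if not (lmin <= w < lmax):
            continue
        # Guard against rounding pushing a sample past the last bin.
        i = min(int((w - lmin) // delta), nbins - 1)
        sums[i] += f
        counts[i] += 1
    centers = [lmin + (i + 0.5) * delta for i in range(nbins)]
    means = [s / c if c else float("nan") for s, c in zip(sums, counts)]
    return centers, means


centers, means = bin_spectrum(
    [1.0, 1.2, 1.6, 1.9, 2.4], [1.0, 3.0, 5.0, 7.0, 9.0], 1.0, 3.0, 1.0
)
```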

sofia_redux.spectroscopy.earthvelocity Module¶

Functions¶

|

Find the radial LSR velocity towards sky coordinates. |

|

Calculate the Cartesian velocity of the Sun. |

|

Provide velocities of the Earth towards a celestial position. |

sofia_redux.spectroscopy.extspec Module¶

Functions¶

|

Fit background to a single column. |

|

Extracts spectra from a rectified spectral image. |

sofia_redux.spectroscopy.findapertures Module¶

Functions¶

|

Determine the position of the aperture(s) in a spatial profile. |

sofia_redux.spectroscopy.fluxcal Module¶

Functions¶

|

Get pixel shift between flux and correction curve. |

|

Calibrate and telluric correct spectral flux. |

sofia_redux.spectroscopy.getapertures Module¶

Functions¶

|

Determine aperture radii for extraction. |

sofia_redux.spectroscopy.getspecscale Module¶

Functions¶

|

Determines the scale factors for a stack of spectra |

sofia_redux.spectroscopy.mkapmask Module¶

Functions¶

|

Constructs a 2D aperture mask. |

sofia_redux.spectroscopy.mkspatprof Module¶

Functions¶

|

Construct average spatial profiles. |

sofia_redux.spectroscopy.radvel Module¶

Functions¶

|

Calculate the expected extrinsic radial velocity wavelength shift. |

sofia_redux.spectroscopy.readflat Module¶

Functions¶

|

Reads a Spextool flat field FITS image |

sofia_redux.spectroscopy.readwavecal Module¶

Functions¶

|

Read a Spextool wavecal file |

sofia_redux.spectroscopy.rectify Module¶

Functions¶

|

Construct average spatial profiles over multiple orders |

sofia_redux.spectroscopy.rectifyorder Module¶

Functions¶

|

Given arrays of x and y coordinates, interpolate to defined grids |

|

Trim rows and columns from the edges of the coordinate arrays. |

|

Construct average spatial profiles for a single order |

|

Update a FITS header with spectral WCS information. |

|

Construct average spatial profiles for a single order |

sofia_redux.spectroscopy.smoothres Module¶

Functions¶

|

Smooth data to a constant resolution |

sofia_redux.spectroscopy.tracespec Module¶

Functions¶

|

Trace spectral continua in a spatially/spectrally rectified image. |

sofia_redux.calibration¶

sofia_redux.calibration.pipecal_applyphot Module¶

Calculate aperture photometry and update FITS header.

Functions¶

|

Calculate photometry on a FITS image and store results to FITS header. |

sofia_redux.calibration.pipecal_calfac Module¶

Calculate a calibration factor from a standard flux value.

Functions¶

|

Calculate the calibration factor for a flux standard. |

sofia_redux.calibration.pipecal_config Module¶

Calibration configuration.

Functions¶

|

Parse all reference files and return appropriate configuration values. |

|

Read response files. |

sofia_redux.calibration.pipecal_fitpeak Module¶

Fit a 2D function to an image.

Functions¶

|

Function for an elliptical Gaussian profile. |

|

Function for an elliptical Lorentzian profile. |

|

Function for an elliptical Moffat profile. |

|

Fit a peak profile to a 2D image. |

sofia_redux.calibration.pipecal_photometry Module¶

Fit a source and perform aperture photometry.

Functions¶

|

Perform aperture photometry and profile fits on image data. |

sofia_redux.calibration.pipecal_rratio Module¶

Calculate response ratio for atmospheric correction.

Functions¶

|

Calculate the R ratio for a given ZA and Altitude or PWV. |

sofia_redux.calibration.pipecal_util Module¶

Utility and convenience functions for common pipecal use cases.

Functions¶

|

Robust average of zenith angle from FITS header. |

|

Robust average of altitude from FITS header. |

|

Robust average of precipitable water vapor from FITS header. |

|

Estimate the position of a standard source in the image. |

|

Add calibration-related keywords to a header. |

|

Add photometry-related keywords to a header. |

|

Retrieve a flux calibration factor from configuration. |

|

Apply a flux calibration factor to an image. |

|

Retrieve a telluric correction factor from configuration. |

|

Apply a telluric correction factor to an image. |

|

Run photometry on an image of a standard source. |

sofia_redux.calibration.pipecal_error Module¶

Base class for pipecal errors.

Classes¶

A ValueError raised by pipecal functions. |

sofia_redux.visualization¶

sofia_redux.visualization.quicklook Module¶

Functions¶

|

Generate a map image from a FITS file. |

|

Generate a plot of spectral data. |

sofia_redux.visualization.redux_viewer Module¶

Classes¶

Redux Viewer interface to the Eye of SOFIA. |

Class Inheritance Diagram¶

[Inheritance diagram (parent → child): Viewer → EyeViewer]

sofia_redux.visualization.controller Module¶

Standalone front-end for Eye of SOFIA display tool.

Functions¶

|

The Eye of SOFIA spectral viewer. |

|

Parse command line arguments. |

|

Check arguments for validity. |

sofia_redux.visualization.eye Module¶

Classes¶

|

Run the Eye of SOFIA. |

Class Inheritance Diagram¶

[Inheritance diagram: Eye (no documented parent classes)]

sofia_redux.pipeline¶

The Redux application programming interface (API), including the FORCAST interface classes, is documented in the sofia_redux.pipeline package.

Appendix A: Pipeline Recipe¶

This JSON document is the black-box interface specification for the FORCAST Redux pipeline, as defined in the Pipetools-Pipeline ICD.

{
    "inputmanifest" : "infiles.txt",
    "outputmanifest" : "outfiles.txt",
    "env" : {
        "DPS_PYTHON": "$DPS_SHARE/share/anaconda3/envs/forcast/bin"
    },
    "knobs" : {
        "REDUX_CONFIG" : {
            "desc" : "Redux parameter file containing custom configuration.",
            "type" : "string",
            "default": "None"
        }
    },
    "command" : "$DPS_PYTHON/redux_pipe infiles.txt -c $DPS_REDUX_CONFIG"
}
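
For maintainers who want to check how the recipe expands, the following sketch parses the JSON and substitutes variables into the command string. It is an illustration under stated assumptions, not the Pipetools implementation: the helper name `build_command` is hypothetical, and the rule that a knob named REDUX_CONFIG is exposed as $DPS_REDUX_CONFIG is inferred from the command string rather than taken from the ICD.

```python
import json
from string import Template

RECIPE = """
{
    "inputmanifest" : "infiles.txt",
    "outputmanifest" : "outfiles.txt",
    "env" : {
        "DPS_PYTHON": "$DPS_SHARE/share/anaconda3/envs/forcast/bin"
    },
    "knobs" : {
        "REDUX_CONFIG" : {
            "desc" : "Redux parameter file containing custom configuration.",
            "type" : "string",
            "default": "None"
        }
    },
    "command" : "$DPS_PYTHON/redux_pipe infiles.txt -c $DPS_REDUX_CONFIG"
}
"""


def build_command(recipe_text, environ, knob_values=None):
    """Expand the recipe command using the environment and knob values."""
    recipe = json.loads(recipe_text)
    variables = dict(environ)
    # Expand each "env" entry against the variables known so far.
    for name, value in recipe.get("env", {}).items():
        variables[name] = Template(value).safe_substitute(variables)
    # ASSUMPTION: each knob NAME is passed to the command as $DPS_NAME.
    for name, spec in recipe.get("knobs", {}).items():
        value = (knob_values or {}).get(name, spec["default"])
        variables["DPS_" + name] = value
    # safe_substitute leaves any unknown $VARIABLE untouched.
    return Template(recipe["command"]).safe_substitute(variables)


default_cmd = build_command(RECIPE, {"DPS_SHARE": "/opt/dps"})
custom_cmd = build_command(
    RECIPE, {"DPS_SHARE": "/opt/dps"}, {"REDUX_CONFIG": "custom.cfg"}
)
```

The `/opt/dps` path and the `custom.cfg` file name are placeholders chosen for the example.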