HAWC+ DRP User’s Manual¶

Introduction¶

The SI Pipeline User’s Manual (OP10) is intended for use by both SOFIA Science Center staff during routine data processing and analysis, and also as a reference for Guest Observers (GOs) and archive users to understand how the data in which they are interested was processed. This manual is intended to provide all the needed information to execute the SI data reduction pipeline, and assess the data quality of the resulting products. It will also provide a description of the algorithms used by the pipeline and both the final and intermediate data products.

A description of the current pipeline capabilities, testing results, known issues, and installation procedures are documented in the SI Pipeline Software Version Description Document (SVDD, SW06, DOCREF). The overall Verification and Validation (V&V) approach can be found in the Data Processing System V&V Plan (SV01-2232). Both documents can be obtained from the SOFIA document library in Windchill.

This manual applies to HAWC DRP version 3.2.0.

SI Observing Modes Supported¶

HAWC+ Instrument Information¶

HAWC+ is the upgraded and redesigned incarnation of the High-Resolution Airborne Wide-band Camera instrument (HAWC), built for SOFIA. Since the original design never collected data for SOFIA, the instrument may be alternately referred to as HAWC or HAWC+. HAWC+ is designed for far-infrared imaging observations in either total intensity (imaging) or polarimetry mode.

HAWC currently consists of dual TES BUG Detector arrays in a 64x40 rectangular format. A six-position filter wheel is populated with five broadband filters ranging from 40 to 250 \(\mu\)m and a dedicated position for diagnostics. Another wheel holds pupil masks and rotating half-wave plates (HWPs) for polarization observations. A polarizing beam splitter directs the two orthogonal linear polarizations to the two detectors (the reflected (R) array and the transmitted (T) array). Each array was designed to have two 32x40 subarrays, for four total detectors (R0, R1, T0, and T1), but T1 is not currently available for HAWC. Since polarimetry requires paired R and T pixels, it is currently only available for the R0 and T0 arrays. Total intensity observations may use the full set of 3 subarrays.

HAWC+ Observing Modes¶

The HAWC instrument has two instrument configurations, for imaging and polarization observations. In both types of observations, removing background flux due to the telescope and sky is a challenge that requires one of several observational strategies. The HAWC instrument may use the secondary mirror to chop rapidly between two positions (source and sky), may use discrete telescope motions to nod between different sky positions, or may use slow continuous scans of the telescope across the desired field. In chopping and nodding strategies, sky positions are subtracted from source positions to remove background levels. In scanning strategies, the continuous stream of data is used to solve for the underlying source and background structure.

The instrument has two standard observing modes for imaging: the Chop-Nod instrument mode combines traditional chopping with nodding; the Scan mode uses slow telescope scans without chopping. The Scan mode is the most commonly used for total intensity observations. Likewise, polarization observations may be taken in either Nod-Pol or Scan-Pol mode. Nod-Pol mode includes chopping and nodding cycles in multiple HWP positions; Scan-Pol mode includes repeated scans at multiple HWP positions.

All modes that include chopping or nodding may be chopped and nodded on-chip or off-chip. Currently, only two-point chop patterns with matching nod amplitudes (nod-match-chop) are used in either Chop-Nod or Nod-Pol observations, and nodding is performed in an A-B-B-A pattern only. All HAWC modes can optionally have a small dither pattern or a larger mapping pattern, to cover regions of the sky larger than HAWC’s fields of view. Scanning patterns may be either box rasters or Lissajous patterns.

Algorithm Description¶

Chop-Nod and Nod-Pol Reduction Algorithms¶

The following sections describe the major algorithms used to reduce Chop-Nod and Nod-Pol observations. In nearly every case, Chop-Nod (total intensity) reductions use the same methods as Nod-Pol observations, but either apply the algorithm to the data for the single HWP angle available, or else, if the step is specifically for polarimetry, have no effect when called on total intensity data. Since nearly all total intensity HAWC observations are taken with scanning mode, the following sections will focus primarily on Nod-Pol data.

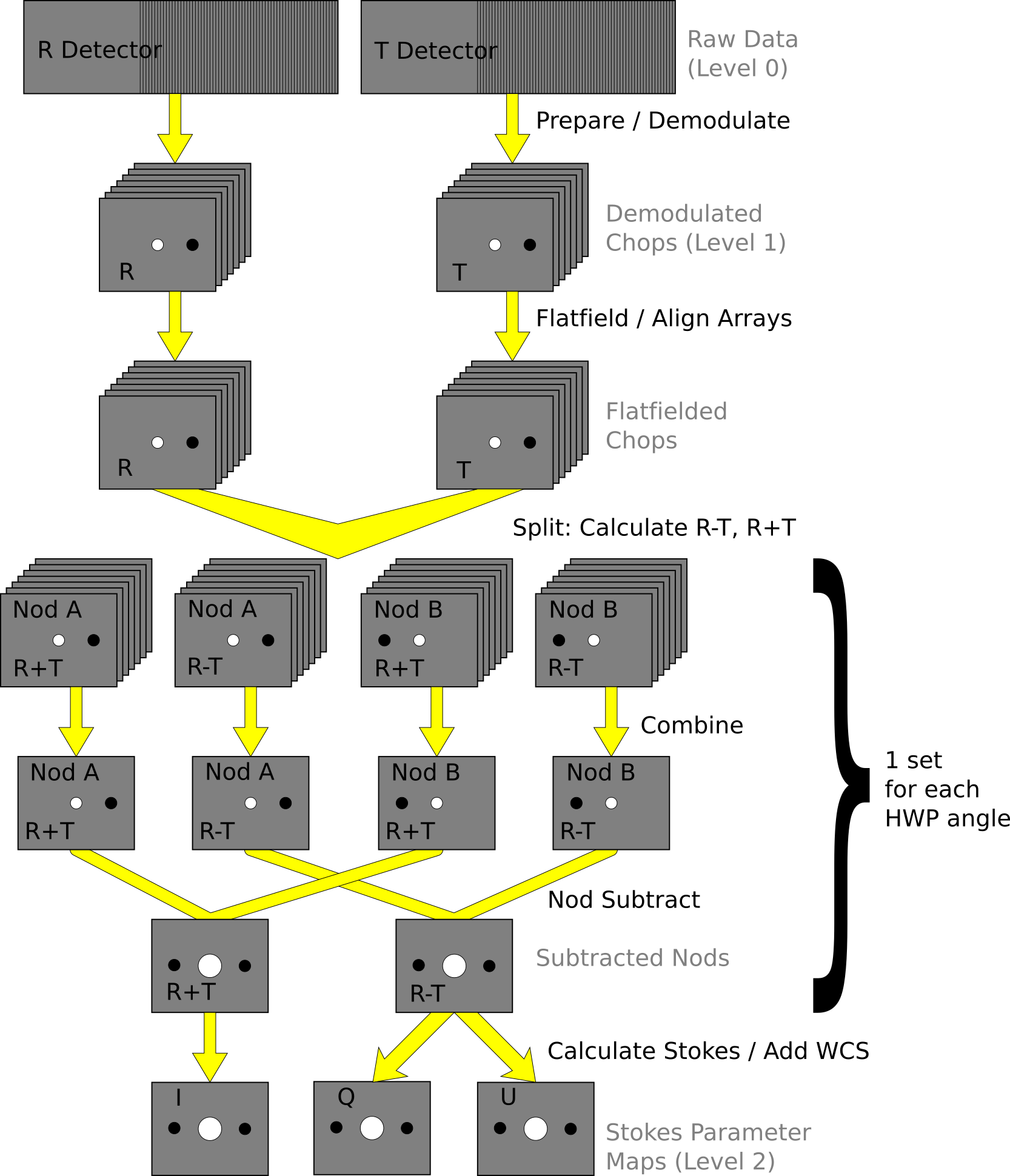

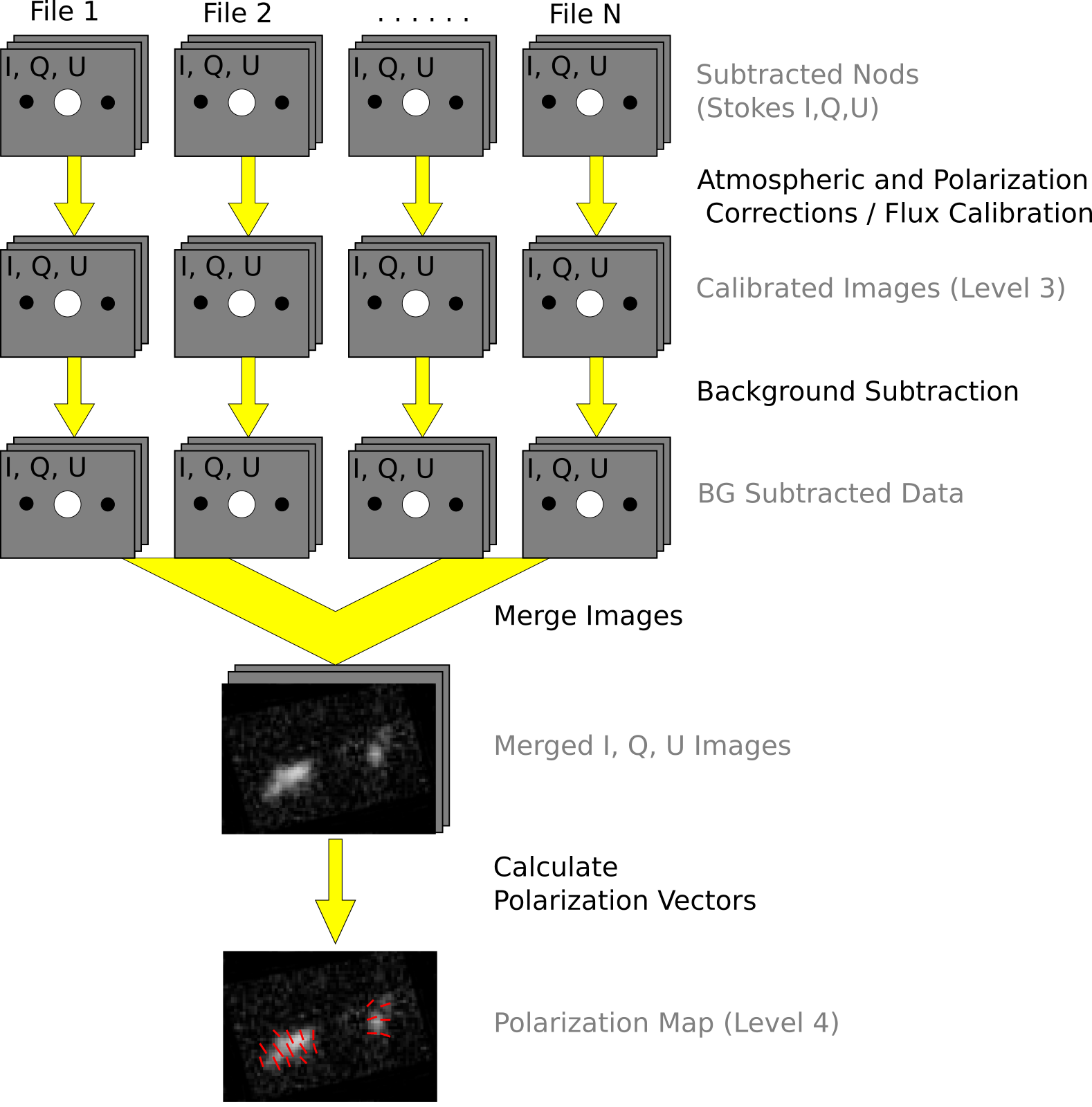

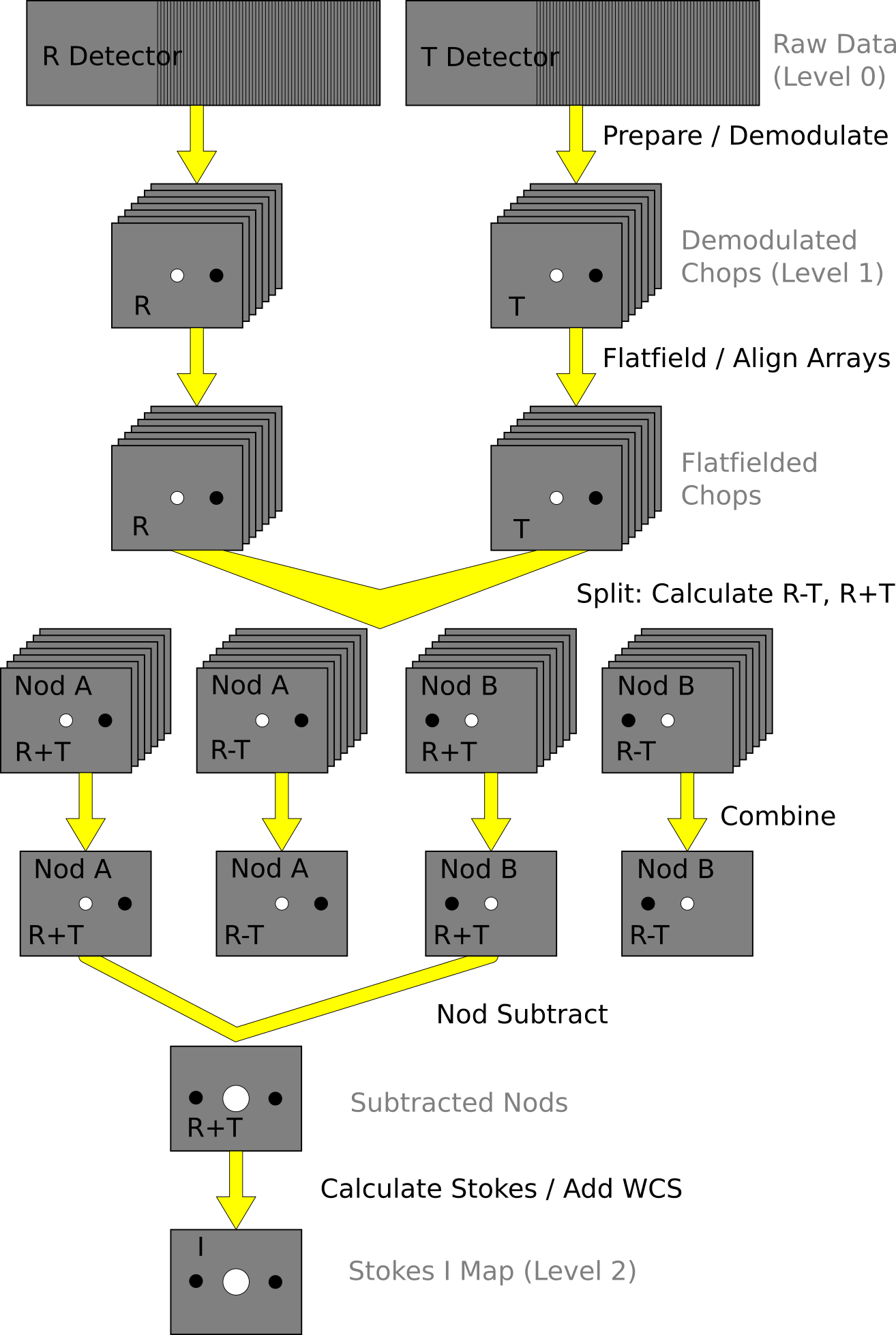

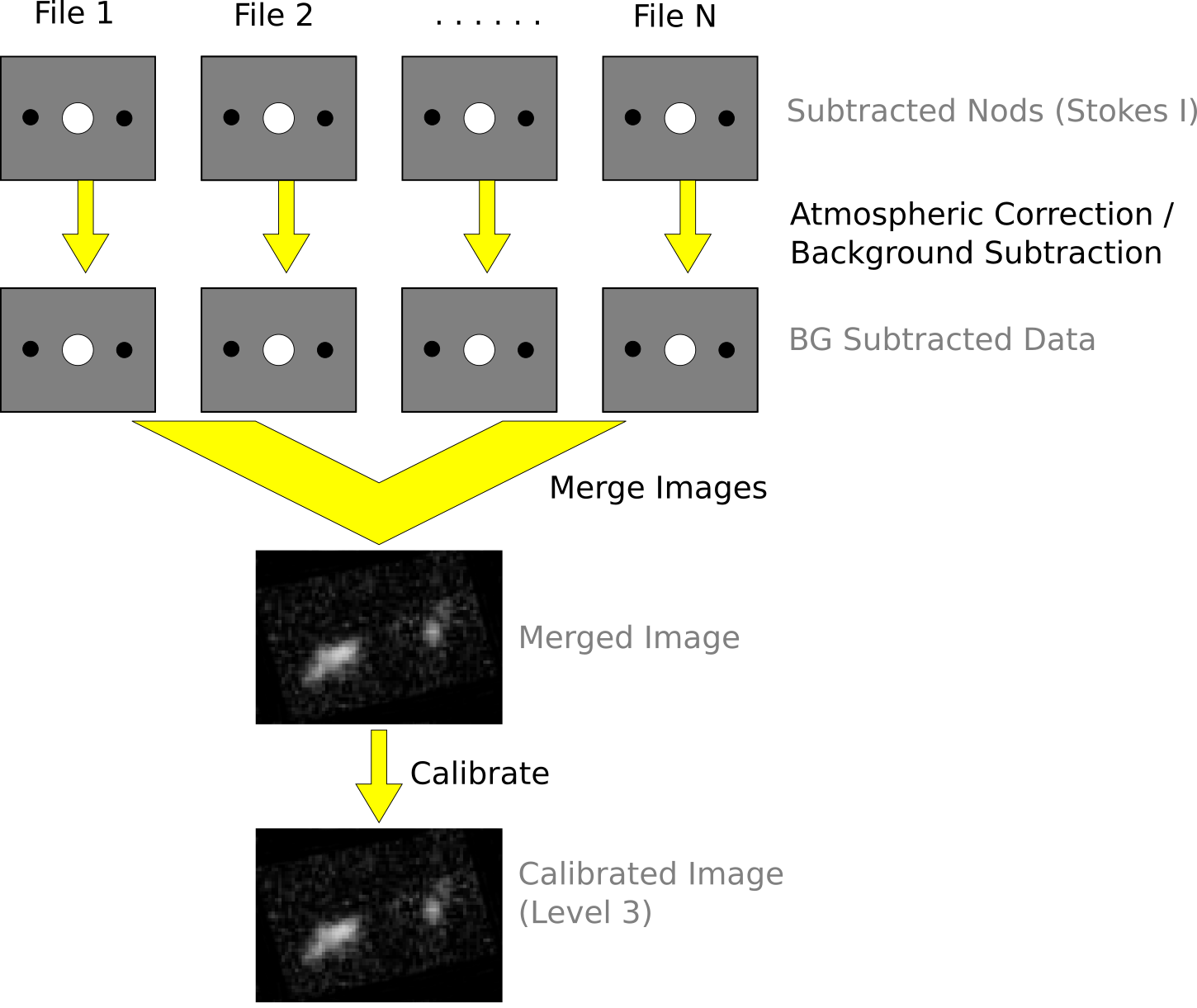

See the figures below for flow charts that illustrate the data reduction process for Nod-Pol data (Fig. 100 and Fig. 101) and Chop-Nod data (Fig. 102 and Fig. 103).

Fig. 100 Nod-Pol data reduction flowchart, up through Stokes parameter calculation for a single input file.¶

Fig. 101 Nod-Pol data reduction flowchart, picking up from Stokes parameter calculation, through combining multiple input files and calculating polarization vectors.¶

Fig. 102 Chop-Nod data reduction flowchart, up through Stokes parameter calculation for a single input file.¶

Fig. 103 Chop-Nod data reduction flowchart, picking up from Stokes parameter calculation, through combining multiple input files.¶

Prepare¶

The first step in the pipeline is to prepare the raw data for processing, by rearranging and regularizing the raw input data tables, and performing some initial calculations required by subsequent steps.

The raw (Level 0) HAWC files contain all information in FITS binary table extensions located in two Header Data Unit (HDU) extensions. The raw file includes the following HDUs:

Primary HDU: Contains the necessary FITS keywords in the header but no data. It contains all required keywords for SOFIA data files, plus all keywords required to reduce or characterize the various observing modes. Extra keywords (either from the SOFIA keyword dictionary or otherwise) have been added for human parsing.

CONFIGURATION HDU (EXTNAME = CONFIGURATION): Contains MCE (detector electronics) configuration data. This HDU is stored only in the raw and demodulated files; it is not stored in Level 2 or higher data products. Nominally, it is the first HDU but users should use EXTNAME to identify the correct HDUs. Note, the “HIERARCH” keyword option and long strings are used in this HDU. All keyword names are prefaced with “MCEn” where n=0,1,2,3. Only the header is used from this HDU.

TIMESTREAM Data HDU (EXTNAME = TIMESTREAM): Contains a binary table with data from all detectors, with one row for each time sample. The raw detector data is stored in the column “SQ1Feedback”, in FITS (data-store) indices, i.e. 41 rows and 128 columns. Columns 0-31 are for subarray R0, 32-63 for R1, 64-95 for T0 and 96-127 for T1). Additional columns contain other important data and metadata, including time stamps, instrument encoder readings, chopper signals, and astrometry data.

In order to begin processing the data, the pipeline first splits these input TIMESTREAM data arrays into separate R and T tables. It will also compute nod and chop offset values from telescope data, and may also delete, rename, or replace some input columns in order to format them as expected by later algorithms. The output data from this step has the same HDU structure as the input data, but the detector data is now stored in the “R Array” and “T Array” fields, which have 41 rows and 64 columns each.

Demodulate¶

For both Chop-Nod and Nod-Pol instrument modes, data is taken in a two-point chop cycle. In order to combine the data from the high and low chop positions, the pipeline demodulates the raw time stream with either a square or sine wave-form. Throughout this step, data for each of the R and T arrays are handled separately. The process is equivalent to identifying matched sets of chopped images and subtracting them.

During demodulation, a number of filtering steps are performed to identify good data. By default, the raw data is first filtered with a box high-pass filter with a time constant of one over the chop frequency. Then, any data taken during telescope movement (line-of-sight rewinds, for example, or tracking errors) is flagged for removal. In square wave demodulation, samples are then tagged as being in the high-chop state, low-chop state, or in between (not used). For each complete chop cycle within a single nod position at a single HWP angle, the pipeline computes the average of the signal in the high-chop state and subtracts it from the average of the signal in the low-chop state. Incomplete chop cycles at the end of a nod or HWP position are discarded. The sine-wave demodulation proceeds similarly, except that the data are weighted by a sine wave instead of being considered either purely high or purely low state.

During demodulation, the data is also corrected for the phase delay in the readout of each pixel, relative to the chopper signal. For square wave demodulation, the phase delay time is multiplied by the sample frequency to calculate the delay in data samples for each individual pixel. The data is then shifted by that many samples before demodulating. For sine wave demodulation, the phase delay time is multiplied with 2\(\pi\) times the chop frequency to get the phase shift of the demodulating wave-form in radians.

Alongside the chop-subtracted flux, the pipeline calculates the error on the raw data during demodulation. It does so by taking the mean of all data samples at the same chop phase, nod position, HWP angle, and detector pixel, then calculates the variance of each raw data point with respect to the appropriate mean. The square root of this value gives the standard deviation of the raw flux. The pipeline will propagate these calculated error estimates throughout the rest of the data reduction steps.

The result of the demodulation process is a chop-subtracted, time-averaged flux value and associated variance for each nod position, HWP angle, and detector pixel. The output is stored in a new FITS table, in the extension called DEMODULATED DATA, which replaces the TIMESTREAM data extension. The CONFIGURATION extension is left unmodified.

Flat Correct¶

After demodulation, the pipeline corrects the data for pixel-to-pixel gain variations by applying a flat field correction. Flat files are generated on the fly from internal calibrator files (CALMODE=INT_CAL), taken before and after each set of science data. Flat files contain normalized gains for the R and T array, so that they are corrected to the same level. Flat files also contain associated variances and a bad pixel mask, with zero values indicating good pixels and any other value indicating a bad pixel. Pixels marked as bad are set to NaN in the gain data. To apply the gain correction and mark bad pixels, the pipeline multiplies the R and T array data by the appropriate flat data. Since the T1 subarray is not available, all pixels in the right half of the T array are marked bad at this stage. The flat variance values are also propagated into the data variance planes.

The output from this step contains FITS images in addition to the data tables. The R array data is stored as an image in the primary HDU; the R array variance, T array data, T array variance, R bad pixel mask, and T bad pixel mask are stored as images in extensions 1 (EXTNAME=”R ARRAY VAR”), 2 (EXTNAME=”T ARRAY”), 3 (EXTNAME=”T ARRAY VAR”), 4 (EXTNAME=”R BAD PIXEL MASK”), and 5 (EXTNAME=”T BAD PIXEL MASK”), respectively. The DEMODULATED DATA table is attached unmodified as extension 6. The R and T data and variance images are 3D cubes, with dimension 64x41xN\(_{frame}\), where N\(_{frame}\) is the number of nod positions in the observation, times the number of HWP positions.

Align Arrays¶

In order to correctly pair R and T pixels for calculating polarization, and to spatially align all subarrays, the pipeline must reorder the pixels in the raw images. The last row is removed, R1 and T1 subarray images (columns 32-64) are rotated 180 degrees, and then all images are inverted along the y-axis. Small shifts between the R0 and T0 and R1 and T1 subarrays may also be corrected for at this stage. The spatial gap between the 0 and 1 subarrays is also recorded in the ALNGAPX and ALNGAPY FITS header keywords, but is not added to the image; it is accounted for in a later resampling of the image. The output images are 64x40xN\(_{frame}\).

Split Images¶

To prepare for combining nod positions and calculating Stokes parameters, the pipeline next splits the data into separate images for each nod position at each HWP angle, calculates the sum and difference of the R and T arrays, and merges the R and T array bad pixel masks. The algorithm uses data from the DEMODULATED DATA table to distinguish the high and low nod positions and the HWP angle. At this stage, any pixel for which there is a good pixel in R but not in T, or vice versa, is noted as a “widow pixel.” In the sum image (R+T), each widow pixel’s flux is multiplied by 2 to scale it to the correct total intensity. In the merged bad pixel mask, widow pixels are marked with the value 1 (R only) or 2 (T only), so that later steps may handle them appropriately.

The output from this step contains a large number of FITS extensions: DATA and VAR image extensions for each of R+T and R-T for each HWP angle and nod position, a VAR extension for uncombined R and T arrays at each HWP angle and nod position, as well as a TABLE extension containing the demodulated data for each HWP angle and nod position, and a single merged BAD PIXEL MASK image. For a typical Nod-Pol observation with two nod positions and four HWP angles, there are 8 R+T images, 8 R-T images, 32 variance images, 8 binary tables, and 1 bad pixel mask image, for 57 extensions total, including the primary HDU. The output images, other than the bad pixel mask, are 3D cubes with dimension 64x40xN\(_{chop}\), where N\(_{chop}\) is the number of chop cycles at the given HWP angle.

Combine Images¶

The pipeline combines all chop cycles at a given nod position and HWP angle by computing a robust mean of all the frames in the R+T and R-T images. The robust mean is computed at each pixel using Chauvenet’s criterion, iteratively rejecting pixels more than 3\(\sigma\) from the mean value, by default. The associated variance values are propagated through the mean, and the square root of the resulting value is stored as an error image in the output.

The output from this step contains the same FITS extensions as in the previous step, with all images now reduced to 2D images with dimensions 64x40, and the variance images for R+T and R-T replaced with ERROR images. For the example above, with two nod positions and four HWP angles, there are still 57 total extensions, including the primary HDU.

Subtract Beams¶

In this pipeline step, the sky nod positions (B beams) are subtracted from the source nod positions (A beams) at each HWP angle and for each set of R+T and R-T, and the resulting flux is divided by two for normalization. The errors previously calculated in the combine step are propagated accordingly. The output contains extensions for DATA and ERROR images for each set, as well as variance images for R and T arrays, a table of demodulated data for each HWP angle, and the bad pixel mask.

Compute Stokes¶

From the R+T and R-T data for each HWP angle, the pipeline now computes images corresponding to the Stokes I, Q, and U parameters for each pixel.

Stokes I is computed by averaging the R+T signal over all HWP angles:

where \(N\) is the number of HWP angles and \((R+T)_{\phi}\) is the summed R+T flux at the HWP angle \(\phi\). The associated uncertainty in I is propagated from the previously calculated errors for R+T:

In the most common case of four HWP angles at 0, 45, 22.5, and 67.5 degrees, Stokes Q and U are computed as:

where \((R-T)_{\phi}\) is the differential R-T flux at the HWP angle \(\phi\). Uncertainties in Q and U are propagated from the input error values on R-T:

Since Stokes I, Q, and U are derived from the same data samples, they will have non-zero covariance. For later use in error propagation, the pipeline now calculates the covariance between Q and I (\(\sigma_{QI}\)) and U and I (\(\sigma_{UI}\)) from the variance in R and T as follows:

The covariance between Q and U (\(\sigma_{QU}\)) is zero at this stage, since they are derived from data for different HWP angles.

The output from this step contains an extension for the flux and error of each Stokes parameter, as well as the covariance images, bad pixel mask, and a table of the demodulated data, with columns from each of the HWP angles merged. The STOKES I flux image is in the primary HDU. For Nod-Pol data, there will be 10 additional extensions (ERROR I, STOKES Q, ERROR Q, STOKES U, ERROR U, COVAR Q I, COVAR U I, COVAR Q U, BAD PIXEL MASK, TABLE DATA). For Chop-Nod imaging, only Stokes I is calculated, so there are only 3 additional extensions (ERROR I, BAD PIXEL MASK, TABLE DATA).

Update WCS¶

To associate the pixels in the Stokes parameter image with sky coordinates, the pipeline uses FITS header keywords describing the telescope position to calculate the reference right ascension and declination (CRVAL1/2), the pixel scale (CDELT1/2), and the rotation angle (CROTA2). It may also correct for small shifts in the pixel corresponding to the instrument boresight, depending on the filter used, by modifying the reference pixel (CRPIX1/2). These standard FITS world coordinate system (WCS) keywords are written to the header of the primary HDU.

Subtract Instrumental Polarization¶

The instrument and the telescope itself may introduce some foreground polarization to the data which must be removed to determine the polarization from the astronomical source. The instrument team uses measurements of the sky to characterize the introduced polarization in reduced Stokes parameters (\(q=Q/I\) and \(u=U/I\)) for each filter band at each pixel. The correction is then applied as

and propagated to the associated error and covariance images as

The correction is expected to be good to within \(Q/I < 0.6\%\) and \(U/I < 0.6\%\).

Rotate Polarization Coordinates¶

The Stokes Q and U parameters, as calculated so far, reflect polarization angles measured in detector coordinates. After the foreground polarization is removed, the parameters may then be rotated into sky coordinates. The pipeline calculates a relative rotation angle, \(\alpha\), that accounts for the vertical position angle of the instrument, the initial angle of the half-wave plate position, and an offset position that is different for each HAWC filter. It applies the correction to the Q and U images with a standard rotation matrix, such that:

The errors and covariances become:

Correct for Atmospheric Opacity¶

In order to combine images taken under differing atmospheric conditions, the pipeline corrects the flux in each individual file for the estimated atmospheric transmission during the observation, based on the altitude and zenith angle at the time when the observation was obtained.

Atmospheric transmission values in each HAWC+ filter have been computed for a range of telescope elevations and observatory altitudes (corresponding to a range of overhead precipitable water vapor values) using the ATRAN atmospheric modeling code, provided to the SOFIA program by Steve Lord. The ratio of the transmission at each altitude and zenith angle, relative to that at the reference altitude (41,000 feet) and reference zenith angle (45 degrees), has been calculated for each filter and fit with a low-order polynomial. The ratio appropriate for the altitude and zenith angle of each observation is calculated from the fit coefficients. The pipeline applies this relative opacity correction factor directly to the flux in the Stokes I, Q, and U images, and propagates it into the corresponding error and covariance images.

Calibrate Flux¶

The pipeline now converts the flux units from instrumental counts to physical units of Jansky per pixel (Jy/pixel). For each filter band, the instrument team determines a calibration factor in counts/Jy/pixel appropriate to data that has been opacity-corrected to the reference zenith angle and altitude.

The calibration factors are computed in a manner similar to that for another SOFIA instrument (FORCAST), taking into account that HAWC+ is a bolometer, not a photon-counting device. Measured photometry is compared to the theoretical fluxes of objects (standards) whose spectra are assumed to be known. The predicted fluxes in each HAWC+ passband are computed by multiplying the model spectrum by the overall response curve of the telescope and instrument system and integrating over the filter passband. For HAWC+, the standards used to date include Uranus, Neptune, Ceres, and Pallas. The models for Uranus and Neptune were obtained from the Herschel project (see Mueller et al. 2016). Standard thermal models are used for Ceres and Pallas. All models are scaled to match the distances of the objects at the time of the observations. Calibration factors computed from these standards are then corrected by a color correction factor based on the mean and pivot wavelengths of each passband, such that the output flux in the calibrated data product is that of a nominal, flat spectrum source at the mean wavelength for the filter. See the FORCAST GO Handbook, available from the SOFIA webpage, for more details on the calibration process.

Raw calibration factors are computed as above by the pipeline, for any observation marked as a flux standard (OBSTYPE=STANDARD_FLUX), and are stored in the FITS headers of the output data product. The instrument team generally combines these factors across a flight series, to determine a robust average value for each instrument configuration and mode. The overall calibration thus determined is expected to be good to within about 10%.

For science observations, the series-average calibration factor is directly applied to the flux in each of the Stokes I, Q, and U images, and to their associated error and covariance images:

where f is the reference calibration factor. The systematic error on f is not propagated into the error planes, but it is stored in the ERRCALF FITS header keyword. The calibration factor applied is stored in the CALFCTR keyword.

Note that for Chop-Nod imaging data, this factor is applied after the merge step, below.

Subtract Background¶

After chop and nod subtraction, some residual background noise may remain in the flux images. After flat correction, some residual gain variation may remain as well. To remove these, the pipeline reads in all images in a reduction group, and then iteratively performs the following steps:

Smooth and combine the input Stokes I, Q, and U images

Compare each Stokes I image (smoothed) to the combined map to determine any background offset or scaling

Subtract the offset from the input (unsmoothed) Stokes I images; scale the input Stokes I, Q, and U images

Compare each smoothed Stokes Q and U images to the combined map to determine any additional background offset

Subtract the Q and U offsets from the input Q and U images

The final determined offsets (\(a_I, a_Q, a_U\)) and scales (\(b\)) for each file are applied to the flux for each Stokes image as follows:

and are propagated into the associated error and covariance images appropriately.

Rebin Images¶

In polarimetry, it is sometimes useful to bin several pixels together to increase signal-to-noise, at the cost of decreased resolution. The chop-nod pipeline provides an optional step to perform this binning on individual images, prior to merging them together into a single map.

The Stokes I, Q, and U images are divided into blocks of a specified bin width, then each block is summed over. The summed flux is scaled to account for missing pixels within the block, by the factor:

where \(n_{pix}\) is the number of pixels in a block, and \(n_{valid}\) is the number of valid pixels within the block. The error and covariance images are propagated to match. The WCS keywords in the FITS header are also updated to match the new data array.

By default, no binning is performed by the pipeline. The additional processing is generally performed only on request for particular science cases.

Merge Images¶

All steps up until this point produce an output file for each input file taken at each telescope dither position, without changing the pixelization of the input data. To combine files taken at separate locations into a single map, the pipeline resamples the flux from each onto a common grid, defined such that North is up and East is to the left. First, the WCS from each input file is used to determine the sky location of all the input pixels. Then, for each pixel in the output grid, the algorithm considers all input pixels within a given radius that are not marked as bad pixels. It weights the input pixels by a Gaussian function of their distance from the output grid point and, optionally, their associated errors. The value at the output grid pixel is the weighted average of the input pixels within the considered window. The output grid may subsample the input pixels: by default, there are 4 output pixels for each input pixel. For flux conservation, the output flux is multiplied by the ratio of the output pixel area to the input pixel area.

The error maps output by this algorithm are calculated from the input variances for the pixels involved in each weighted average. That is, the output fluxes from N input pixels are:

and the output errors and covariances are

where \(w_i\) is the pixel weight and \(w_{tot}\) is the sum of the weights of all input pixels.

As of HAWC DRP v2.4.0, the distance-weighted input pixels within the fit radius may optionally be fit by a low-order polynomial surface, rather than a weighted average. In this case, each output pixel value is the value of the local polynomial fit, evaluated at that grid location. Errors and covariances are propagated similarly.

The output from this step is a single FITS file, containing a flux and error image for each of Stokes I, Q, and U, as well as the Stokes covariance images. An image mask is also produced, which represents how many input pixels went into each output pixel. Because of the weighting scheme, the values in this mask are not integers. A data table containing demodulated data merged from all input tables is also attached to the file with extension name MERGED DATA.

Compute Vectors¶

Using the Stokes I, Q, and U images, the pipeline now computes the polarization percentage (\(p\)) and angle (\(\theta\)) and their associated errors (\(\sigma\)) in the standard way. For the polarization angle \(\theta\) in degrees:

The percent polarization (\(p\)) and its error are calculated as

The debiased polarization percentage (\(p'\))is also calculated, as:

Each of the \(\theta\), \(p\), and \(p'\) maps and their error images are stored as separate extensions in the output from this step, which is the final output from the pipeline for Nod-Pol data. This file will have 19 extensions, including the primary HDU, with extension names, types, and numbers as follows:

STOKES I: primary HDU, image, extension 0

ERROR I: image, extension 1

STOKES Q: image, extension 2

ERROR Q: image, extension 3

STOKES U: image, extension 4

ERROR U: image, extension 5

IMAGE MASK: image, extension 6

PERCENT POL: image, extension 7

DEBIASED PERCENT POL: image, extension 8

ERROR PERCENT POL: image, extension 9

POL ANGLE: image, extension 10

ROTATED POL ANGLE: image, extension 11

ERROR POL ANGLE: image, extension 12

POL FLUX: image, extension 13

ERROR POL FLUX: image, extension 14

DEBIASED POL FLUX: image, extension 15

MERGED DATA: table, extension 16

POL DATA: table, extension 17

FINAL POL DATA: table, extension 18

The final two extensions contain table representations of the polarization values for each pixel, as an alternate representation of the \(\theta\), \(p\), and \(p'\) maps. The FINAL POL DATA table (extension 18) is a subset of the POL DATA table (extension 17), with data quality cuts applied.

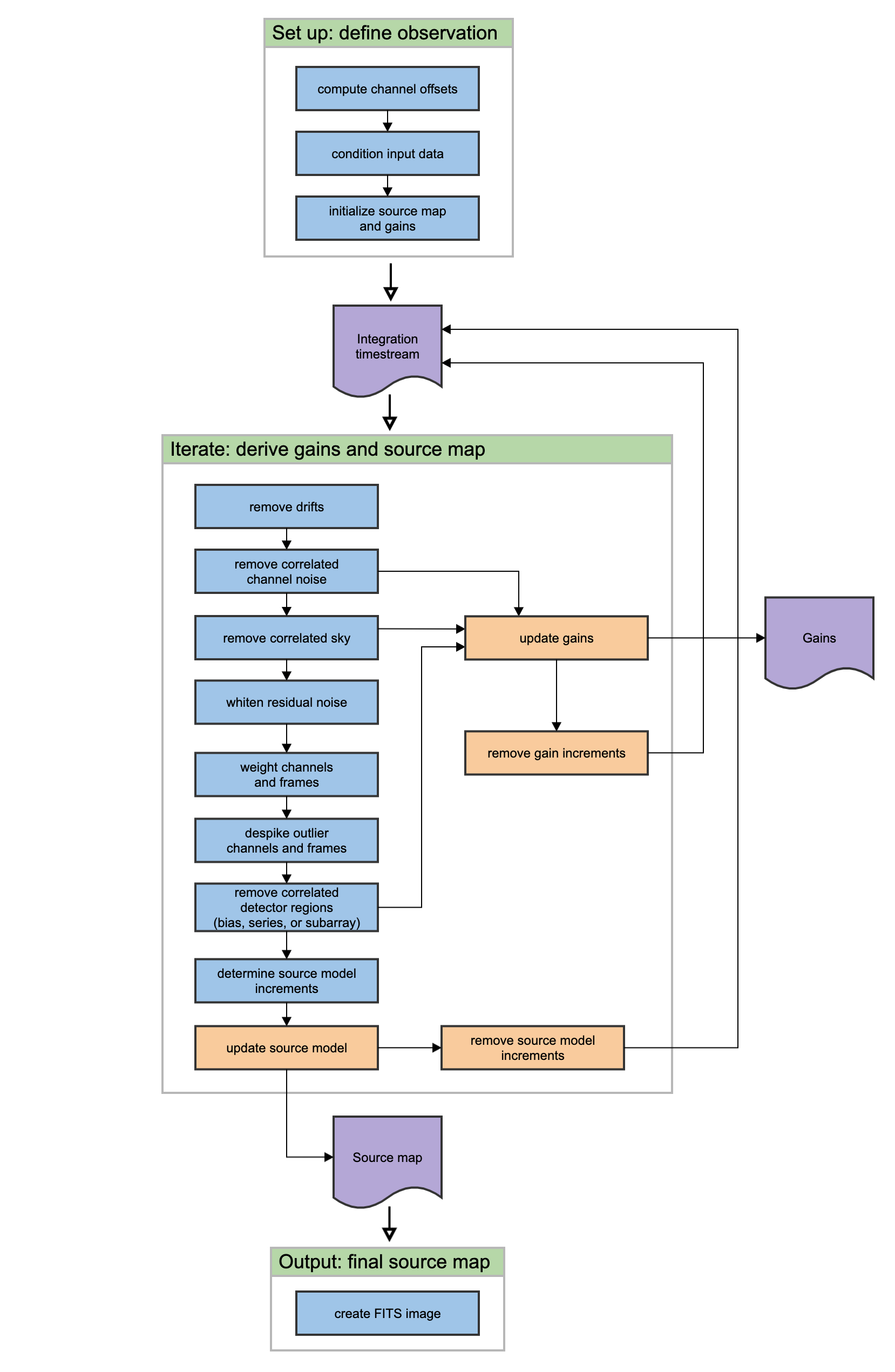

Scan Reduction Algorithms¶

This section covers the main algorithms used to reduce Scan mode data. See the flowchart in Fig. 104 for an overview of the iterative process. In this description, “channels” refer to detector pixels, and “frames” refer to time samples read out from the detector pixels during the scan observation.

Fig. 104 Scan data reduction flowchart¶

Signal Structure¶

Scan map reconstruction is based on the assumption that the measured data (\(X_{ct}\)) for detector \(c\), recorded at time \(t\), is the superposition of various signal components and essential (not necessarily white) noise \(n_{ct}\):

We can model the measured detector timestreams via a number of appropriate parameters, such as 1/f drifts (\(D_{ct}\)), \(n\) correlated noise components (\(C_{(1),t} ... C_{(n),t}\)) and channel responses to these (gains, \(g_{(1),c} ... g_{(n),c}\)), and the observed source structure (\(S_{xy}\)). We can derive statistically sound estimates (such as maximum-likelihood or robust estimates) for these parameters based on the measurements themselves. As long as our model is representative of the physical processes that generate the signals, and sufficiently complete, our derived parameters should be able to reproduce the measured data with the precision of the underlying limiting noise.

Below is a summary of the assumed principal model parameters, in general:

\(X_{ct}\): The raw timestream of channel c, measured at time t.

\(D_{ct}\): The 1/f drift value of channel c at time t.

\(g_{(1),c} ... g_{(n),c}\): Channel \(c\) gain (response) to correlated signals (for modes 1 through \(n\)).

\(C_{(1),t} ... C_{(n),t}\): Correlated signals (for modes 1 through \(n\)) at time \(t\).

\(G_c\): The point source gain of channel \(c\)

\(M_{ct}^{xy}\): Scanning pattern, mapping a sky position \(\{x,y\}\) into a sample of channel \(c\) at time \(t\).

\(S_{xy}\): Actual 2D source flux at position \(\{x,y\}\).

\(n_{ct}\): Essential limiting noise in channel c at time t.

Sequential Incremental Modeling and Iterations¶

The pipeline’s approach is to solve for each term separately, and sequentially, rather than trying to do a brute-force matrix inversion in a single step. Sequential modeling works on the assumption that each term can be considered independently from one another. To a large degree this is justified, as many of the signals produce more or less orthogonal imprints in the data (e.g. you cannot easily mistake correlated sky response seen by all channels with a per-channel DC offset). As such, from the point of view of each term, the other terms represent but an increased level of noise. As the terms all take turns in being estimated (usually from bright to faint) this model confusion “noise” goes away, especially with multiple iterations.

Even if the terms are not perfectly orthogonal to one another, and have degenerate flux components, the sequential approach handles this degeneracy naturally. Degenerate fluxes between a pair of terms will tend to end up in the term that is estimated first. Thus, the ordering of the estimation sequence provides a control on handling degeneracies in a simple and intuitive manner.

A practical trick for efficient implementation is to replace the raw timestream with the unmodeled residuals \(X_{ct} \rightarrow R_{ct}\) and let modeling steps produce incremental updates to the model parameters. Every time a model parameter is updated, its incremental imprint is removed from the residual timestream (a process we shall refer to as synchronization).

With each iteration, the incremental changes to the parameters become more insignificant, and the residual will approach the limiting noise of the measurement.

Initialization and Scan Validation¶

Prior to beginning iterative solution for the model components, the pipeline reads in the raw FITS table, assigns positional offsets to every detector channel, and sky coordinates to every time frame in the scan.

The input timestream is then checked for inconsistencies. For example, HAWC data is prone to discontinuous jumps in flux levels. The pipeline will search the timestream for flux jumps, and flag or fix jump-related artifacts as necessary. The pipeline also checks for gaps in the astrometry data in the timestream, gyro drifts over the course of the observation,

By default, the pipeline also clips extreme scanning velocities using, by default, a set minimum and maximum value for each instrument. The default settings still include a broad range of speeds, so images can sometimes be distorted by low or high speeds causing too little or too much exposure on single pixels. To fix this, the pipeline can optionally remove frames from the beginning or end of the observation, or sigma-clip the telescope speeds to a tighter range.

The size of the output source map is determined from the mapped area on the sky, and a configurable output pixel grid size. This map is updated on each iteration, with the derived source model.

Gains for all detector pixels are initialized with a reference gain map, derived from earlier observations. These initial gains serve as a starting place for the iterative model and allow for flagging and removal of channels known to be bad prior to iterating.

DC Offset and 1/f Drift Removal¶

For 1/f drifts, consider only the term:

where \(\delta D_{c\tau}\) is the 1/f channel drift value for \(t\) between \(\tau\) and \(\tau + T\), for a 1/f time window of \(T\) samples. That is, we simply assume that the residuals are dominated by an unmodeled 1/f drift increment \(\delta D_{c\tau}\). Note that detector DC offsets can be treated as a special case with \(\tau = 0\), and \(T\) equal to the number of detector samples in the analysis.

We can construct a \(\chi^2\) measure, as:

where \(w_{ct} = \sigma_{ct}^{-2}\) is the proper noise-weight associated with each datum. The pipeline furthermore assumes that the noise weight of every sample \(w_{ct}\) can be separated into the product of a channel weight \(w_c\) and a time weight \(w_t\), i.e. \(w_{ct} = w_c \cdot w_t\). This assumption is identical to that of separable noise (\(\sigma_{ct} = \sigma_c \cdot \sigma_t\)). Then, by setting the \(\chi^2\) minimizing condition \(\partial \chi^2 / \partial(\delta D_{ct}) = 0\), we arrive at the maximum-likelihood incremental update:

Note that each sample (\(R_{ct}\)) contributes a fraction:

to the estimate of the single parameter \(\delta D_{c\tau}\). In other words, this is how much that parameter is dependent on each data point. Above all, \(p_{ct}\) is a fair measure of the fractional degrees of freedom lost from each datum, due to modeling of the 1/f drifts. We will use this information later, when estimating proper noise weights.

Note, also, that we may replace the maximum-likelihood estimate for the drift parameter with any other statistically sound estimate (such as a weighted median), and it will not really change the dependence, as we are still measuring the same quantity, from the same data, as with the maximum-likelihood estimate. Therefore, the dependence calculation remains a valid and fair estimate of the degrees of freedom lost, regardless of what statistical estimator is used.

The removal of 1/f drifts must be mirrored in the correlated signals also if gain solutions are to be accurate.

Noise Weighting¶

Once we model out the dominant signal components, such that the residuals are starting to approach a reasonable level of noise, we can turn our attention to determining proper noise weights. In its simplest form, we can determine the weights based on the mean observed variance of the residuals, normalized by the remaining degrees of freedom in the data:

where \(N_{(t),c}\) is the number of unflagged data points (time samples) for channel \(c\), and \(P_c\) is the total number of parameters derived from channel \(c\). The scalar value \(\eta_c\) is the overall spectral filter pass correction for channel \(c\), which is 1 if the data was not spectrally filtered, and 0 if the data was maximally filtered (i.e. all information is removed). Thus typical \(\eta_c\) values will range between 0 and 1 for rejection filters, or can be greater than 1 for enhancing filters. We determine time-dependent weights as:

Similar to the above, here \(N_{(c),t}\) is the number of unflagged channel samples in frame \(t\), while \(P_t\) is the total number of parameters derived from frame \(t\). Once again, it is practical to enforce a normalizing condition of setting the mean time weight to unity, i.e. \(\mu(w_t) = 1\). This way, the channel weights \(w_c\) have natural physical weight units, corresponding to \(w_c = 1/\sigma_c^2\).

The total number of parameters derived from each channel, and frame, are simply the sum, over all model parameters \(m\), of all the parameter dependencies \(p_{ct}\) we calculated for them. That is,

and

Getting these lost-degrees-of-freedom measures right is critical for the stability of the solutions in an iterated framework. Even slight biases in \(p_{ct}\) can grow exponentially with iterations, leading to divergent solutions, which may manifest as over-flagging or as extreme mapping artifacts.

Despiking¶

After deriving fair noise weights, we can try to identify outliers in the data (glitches and spikes) and flag them for removal from further analysis. By default, the pipeline uses differential deviations between neighboring data points to identify outlier values.

Spectral Conditioning¶

Ideally, detectors would have featureless white noise spectra (at least after the 1/f noise is treated by the drift removal). In practice, that is rarely the case. Spectral features are bad because (a) they produce mapping features/artifacts (such as “striping”), and because (b) they introduce a covariant noise term between map points that is not easily represented by the output. It is therefore desirable to “whiten” the residual noise whenever possible, to mitigate both these effects.

Noise whitening starts with measuring the effective noise spectrum in a temporal window, significantly shorter than the integration on which it is measured. In the pipeline, the temporal window is designed to match the 1/f stability timescale \(T\) chosen for the drift removal, since the drift removal will wipe out all features on longer timescales. With the use of such a spectral window, we may derive a lower-resolution averaged power-spectrum for each channel. The pipeline then identifies the white noise level, either as the mean (RMS) scalar amplitude over a specified range of frequencies, or automatically, over an appropriate frequency range occupied by the point-source signal as a result of the scanning motion.

Then, the pipeline will look for significant outliers in each spectral bin, above a specified level (and optimally below a critical level too), and create a real-valued spectral filter profile \(\phi_{cf}\) for each channel \(c\) and frequency bin \(f\) to correct these deviations.

There are other filters that can be applied also, such as notch filters, or a motion filter to reject responses synchronous to the dominant telescope motion. In the end, every one of these filters is represented by an appropriate scalar filter profile \(\phi_{cf}\), so the discussion remains unchanged. Only the whitening filter is used by default for HAWC data.

Once a filter profile is determined, we apply the filter by first calculating a rejected signal:

where \(\hat{R}_{cf}\) is the Fourier transform of \(R_{ct}\), using the weighting function provided by \(w_t\), and \(F^{-1}\) denotes the inverse Fourier Transform from the spectral domain back into the timestream. The rejected signals are removed from the residuals as:

The overall filter pass \(\eta_c\) for channel \(c\), can be calculated as:

where \(N_f\) is the number of spectral bins in the profile \(\phi_{cf}\). The above is simply a measure of the white-noise power fraction retained by the filter, which according to Parseval’s theorem, is the same as the power fraction retained in the timestream, or the scaling of the observed noise variances as a result of filtering.

Map Making¶

The mapping algorithm for the output source model implements a nearest-pixel method, whereby each data point is mapped entirely into the map pixel that falls nearest to the given detector channel \(c\), at a given time \(t\). Here,

where \(M_{xy}^{ct}\) associates each sample \(\{c,t\}\) uniquely with a map pixel \(\{x,y\}\), and is effectively the transpose of the mapping function defined earlier. \(\varkappa_c\) is the point-source filtering (pass) fraction of the pipeline. It can be thought of as a single scalar version of the transfer function. Its purpose is to measure how isolated point-source peaks respond to the various reduction steps, and correct for it. When done correctly, point source peaks will always stay perfectly cross-calibrated between different reductions, regardless of what reduction steps were used in each case. More generally, a reasonable quality of cross-calibration (to within 10%) extends to compact and slightly extended sources (typically up to about half of the field-of-view (FoV) in size). While corrections for more extended structures (\(\geq\) FoV) are possible to a certain degree, they come at the price of steeply increasing noise at the larger scales.

The map-making algorithm should skip over any data that is unsuitable for quality map-making (such as too-fast scanning that may smear a source). For formal treatment, we assume that \(M_{ct}^{xy} = 0\) for any troublesome data.

Calculating the precise dependence of each map point \(S_{xy}\) on the timestream data \(R_{ct}\) is computationally costly to the extreme. Instead, the pipeline gets by with the approximation:

This approximation is good as long as most map points are covered with a representative collection of pixels, and as long as the pixel sensitivities are more or less uniformly distributed over the field of view.

We can also calculate the flux uncertainty in the map \(\sigma_{xy}\) at each point \(\{x,y\}\) as:

Source models are first derived from each input scan separately. These may be despiked and filtered, if necessary, before added to the global increment with an appropriate noise weight (based on the observed map noise) if source weighting is desired.

Once the global increment is complete, we can add it to the prior source model \(S_{xy}^{r(0)}\) and subject it to further conditioning, especially in the intermediate iterations. Conditioning operations may include smoothing, spatial filtering, redundancy flagging, noise or exposure clipping, signal-to-noise blanking, or explicit source masking. Once the model is processed into a finalized \(S_{xy}'\), we synchronize the incremental change \(\delta S_{xy}' = S_{xy}' - S_{xy}^{r(0)}\) to the residuals:

Note, again, that \(\delta S_{xy}' \neq \delta S_{xy}\). That is, the incremental change in the conditioned source model is not the same as the raw increment derived above. Also, since the source gains \(G_c\) may have changed since the last source model update, we must also re-synchronize the prior source model \(S_{xy}^{(0)}\) with the incremental source gain changes \(\delta G_c\) (first term inside the brackets).

The pipeline operates under the assumption that the point-source gains \(G_c\) of the detectors are closely related to the observed sky-noise gains \(g_c\) derived from the correlated noise for all channels. Specifically, it treats the point-source gains as the product:

where \(\varepsilon_c\) is the point-source coupling efficiency. It measures the ratio of point-source gains to sky-noise gains (or extended source gains). Generally, the pipeline will assume \(\varepsilon_c = 1\), unless these values are measured and loaded during the initial scan validation sequence.

Optionally, the pipeline can also derive \(\varepsilon_c\) from the observed response to a source structure, provided the scan pattern is sufficient to move significant source flux over all detectors. The source gains also include a correction for atmospheric attenuation, for an optical depth \(\tau\), in-band and in the line of sight.

Point-Source Flux Corrections¶

We mentioned point-source corrections in the section above; here, we explain how these are calculated. First, consider drift removal. Its effect on point source fluxes is a reduction by a factor:

In terms of the 1/f drift removal time constant \(T\) and the typical point-source crossing time \(\tau_{pnt}\). Clearly, the effect of 1/f drift removal is smaller the faster one scans across the source, and becomes negligible when \(\tau_{pnt} \ll T\).

The effect of correlated-noise removal, over some group of channels of mode \(i\), is a little more complex. It is calculated as:

where \(\Omega_{ck}\) is the overlap between channels \(c\) and \(k\). That is, \(\Omega_{ck}\) is the fraction of the point source peak measured by channel \(c\) when the source is centered on channel \(k\). \(N_{(i),t}\) is the number of correlated noise-samples that have been derived for the given mode (usually the same as the number of time samples in the analysis). The correlated model’s dependence on channel \(c\) is:

Finally, the point-source filter correction due to spectral filtering is calculated based on the average point-source spectrum produced by the scanning. Gaussian source profiles with spatial spread \(\sigma_x \approx FWHM / 2.35\) produce a typical temporal spread \(\sigma_t \approx \sigma_x / \bar{v}\), in terms of the mean scanning speed \(\bar{v}\). In frequency space, this translates to a Gaussian frequency spread of \(\sigma_f = (2 \pi \sigma_t)^{-1}\), and thus a point-source frequency profile of:

More generally, \(\Psi_f\) may be complex-valued (asymmetric beam). Accordingly, the point-source filter correction due to filtering with \(\phi_f\) is generally:

The compound point source filtering effect from \(m\) model components is the product of the individual model corrections, i.e.:

Scan Map Output¶

Since the Scan mode algorithms are iterative, there are no well-defined intermediate products that may be written to disk. For Scan mode data, the pipeline takes as input a set of raw Level 0 HAWC FITS files, described in the Prepare section, and writes as output a single FITS file per file group, saved with PRODTYPE = scanmap (file name code SMP). These files contain an image of the source map in units of detector counts, and several other extensions.

The flux calibrated map file is saved as the calibrate product type (CAL). The primary HDU in the CAL file contains the flux image in units of Jy/pixel. The first extension (EXTNAME = EXPOSURE) contains an image of the nominal exposure time in seconds at each point in the map. The second extension (EXTNAME = NOISE) holds the error image corresponding to the flux map, and the third extension (EXTNAME = S/N) is the signal-to-noise ratio of the flux to the error image. The fourth and further extensions contain binary tables of data, one for each input scan.

Scan-Pol Reduction Algorithms¶

Scanning polarimetry reductions are a hybrid of the the Nod-Pol and Scan reduction algorithms, described above.

Scan-Pol observations are performed in a sequence of four scans, where each scan has a different HWP position angle in the following sequence: 5 degrees, 50 degrees, 27.5 degrees, and 72.5 degrees. This sequence is called a ‘set’ hereafter. The pipeline sorts observations into sets and runs the scan map reconstruction algorithm on each set, following the procedure in Scan Reduction Algorithms. For Scan-Pol observations, the pipeline produces two images per scan per HWP angle associated with the R and T arrays. Thus, for a single set, 8 images are generated, one for each of R0 and T0 at each angle. The pipeline creates all maps in the same output coordinate system and pixel scale, so that they are all registered to each other.

Since the scan map step sets the background level independently for each scan image from the median of the full map, there may be inconsistencies in the zero-level between the images, if there is significant diffuse emission across the map. In this case, the pipeline may optionally correct the zero-level in each image by identifying a sky region with no emission, and subtracting the median level in this region from each image. The same region is used for each HWP angle and subarray, so that all images are set independently to a common zero level.

After zero-level correction, the R and T arrays are directly added and subtracted at each HWP angle, and combined as described above to generate Stokes I, Q, and U images (the Compute Stokes step). The output data format is the same as for the stokes product for the Nod-Pol pipeline.

After Stokes calculation, the following steps are also performed, in the way described above for the Nod-Pol pipeline:

Note that the scan map pipeline step performs opacity and background level corrections on individual scans and resamples data into sky coordinates with full WCS corrections, as part of its standard processing, so these steps from the Nod-Pol pipeline are not applied.

The final output product is a polarization map, the same as is produced by the Nod-Pol pipeline.

Other Resources¶

For more information on the code or algorithms used in the HAWC DRP pipeline, see the following documents:

Far-infrared polarimetry analysis: Hildebrand et. al. 2000 PASP, 112, 1215

DRP infrastructure and image viewer: Berthoud, M. 2013 ADASS XXII, 475, 193

The scan map reconstruction algorithms are based on a Java pipeline called CRUSH. For more information, see:

CRUSH paper: Kovács, A. 2008, Proc. SPIE, 7020, 45

CRUSH thesis: Kovács, A. 2006, PhD Thesis, Caltech

Online documentation: http://www.sigmyne.com/crush/

Data Products¶

File names¶

Output files from the HAWC pipeline are named according to the convention:

FILENAME = F[flight]_HA_[mode]_[aorid]_[spectel]_[type]_[fn1[-fn2]].fits

where flight is the SOFIA flight number, HA indicates the instrument (HAWC+), and mode is either IMA for imaging observations, POL for polarization observations, or CAL for diagnostic data. The aorid indicates the SOFIA program and observation number; spectel indicates the filter/band and the HWP setting. The type is a three-letter identifier for the pipeline product type, and fn1 and fn2 are the first and last raw file numbers that were combined to produce the output product. For example, a polarization map data product with AOR-ID 81_0131_04, derived from files 5 to 6 of flight 295, taken in Band A with HWP in the A position would have the filename F0295_HA_POL_81013104_HAWAHWPA_PMP_005-006.fits. See the tables below for a list of all possible values for the three-letter product type.

Data format¶

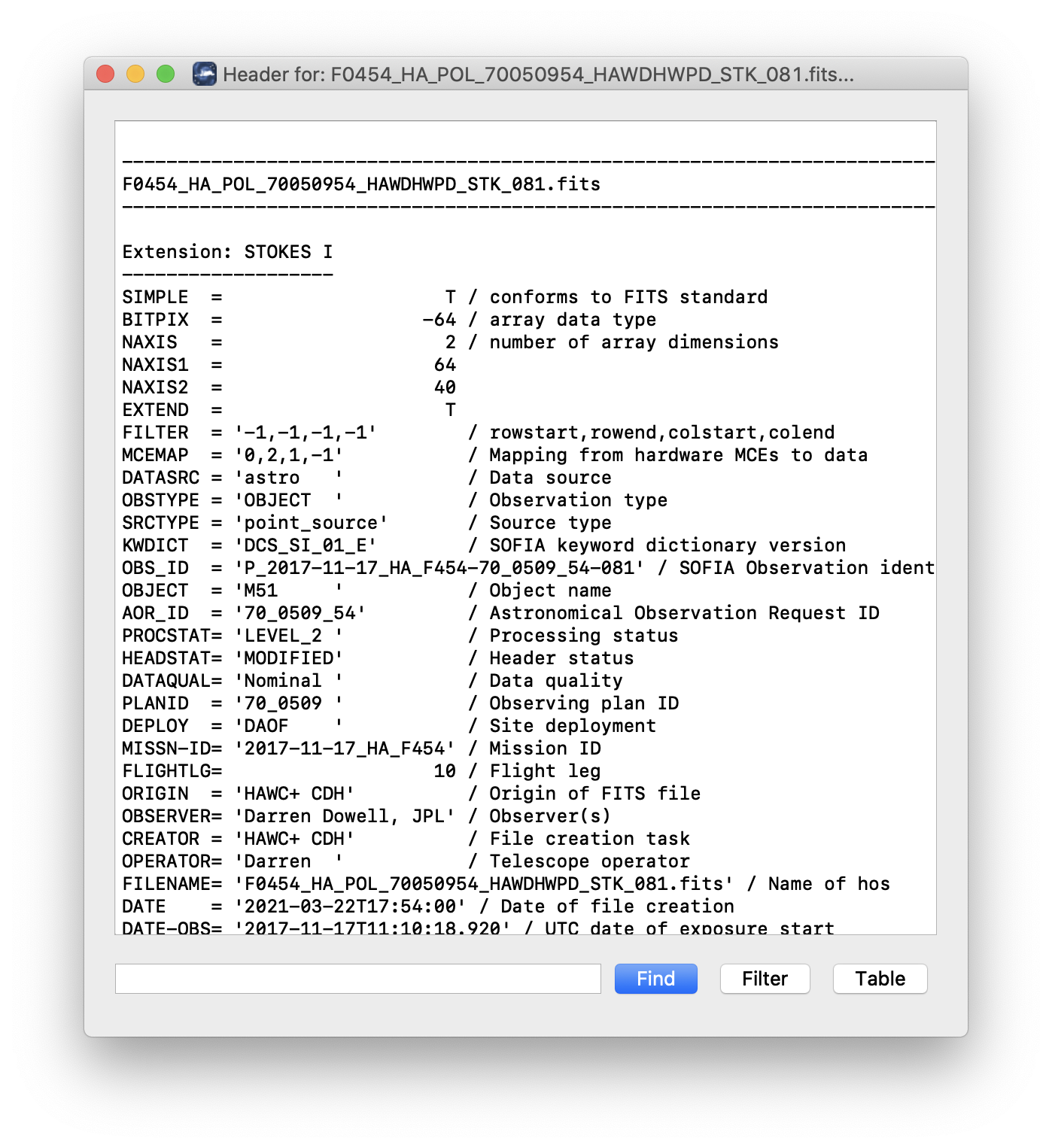

Most HAWC data is stored in FITS files, conforming to the FITS standard (Pence et al. 2010). Each FITS file contains a primary Header Data Unit (HDU) which may contain the most appropriate image data for that particular data reduction level. Most files have additional data stored in HDU image or table extensions. All keywords describing the file are in the header of the primary HDU. Each HDU also has a minimal header and is identified by the EXTNAME header keyword. The algorithm descriptions, above, give more information about the content of each extension.

Pipeline products¶

The following tables list all intermediate and final products that may be generated by the HAWC pipeline, in the order in which they are produced for each mode. The product type is stored in the primary header, under the keyword PRODTYPE. By default, for Nod-Pol mode, the demodulate, opacity, calibrate, merge, and polmap products are saved. For Chop-Nod mode, the demodulate, opacity, merge, and calibrate products are saved. For Scan mode, the scanmap and calibrate products are saved. For Scan-Pol mode, the scanmappol, calibrate, merge, and polmap products are saved.

For polarization data, the pipeline also generates two auxiliary products: a polarization map image in PNG format, with polarization vectors plotted over the Stokes I image, and a polarization vector file in DS9 region format, for displaying with FITS images. These products are alternate representations of the data in the FINAL POL DATA table in the polarization map (PMP) FITS file. Similarly, for imaging data, a PNG quick-look preview image is generated as a final step in the pipeline. These auxiliary products may be distrubuted to observers separately from the FITS file products.

Data products that contain multiple AORs or that contain observations from multiple flights are referred to as multi-mission products. When multi-mission data are processed and stored in the database, they replace the corresponding single-mission/single-AOR data files. This process usually results in fewer data files for a project. For HAWC+, the following data products can be multi-mission:

imaging: calibrate (CAL)

polarimetry: merge (MRG), polmap (PMP).

Step |

Description |

PRODTYPE |

PROCSTAT |

Identifier |

Saved |

|---|---|---|---|---|---|

Make Flat |

Flat generated from Int. Cal file |

obsflat |

LEVEL_2 |

OFT |

Y |

Demodulate |

Chops subtracted |

demodulate |

LEVEL_1 |

DMD |

Y |

Flat Correct |

Flat field correction applied |

flat |

LEVEL_2 |

FLA |

N |

Align Arrays |

R array shifted to T array |

shift |

LEVEL_2 |

SFT |

N |

Split Images |

Data split by nod, HWP |

split |

LEVEL_2 |

SPL |

N |

Combine Images |

Chop cycles combined |

combine |

LEVEL_2 |

CMB |

N |

Subtract Beams |

Nod beams subtracted |

nodpolsub |

LEVEL_2 |

NPS |

N |

Compute Stokes |

Stokes parameters calculated |

stokes |

LEVEL_2 |

STK |

N |

Update WCS |

WCS added to header |

wcs |

LEVEL_2 |

WCS |

N |

Subtract IP |

Instrumental polarization removed |

ip |

LEVEL_2 |

IPS |

N |

Rotate Coordinates |

Polarization angle corrected to sky |

rotate |

LEVEL_2 |

ROT |

N |

Correct Opacity |

Corrected for atmospheric opacity |

opacity |

LEVEL_2 |

OPC |

Y |

Calibrate Flux |

Flux calibrated to physical units |

calibrate |

LEVEL_3 |

CAL |

Y |

Subtract Background |

Residual background removed |

bgsubtract |

LEVEL_3 |

BGS |

N |

Bin Pixels |

Pixels rebinned to increase S/N |

binpixels |

LEVEL_3 |

BIN |

N |

Merge Images |

Dithers merged to a single map |

merge |

LEVEL_3 |

MRG |

Y |

Compute Vectors |

Polarization vectors calculated |

polmap |

LEVEL_4 |

PMP |

Y |

Step |

Description |

PRODTYPE |

PROCSTAT |

Identifier |

Saved |

|---|---|---|---|---|---|

Make Flat |

Flat generated from Int.Cal file |

obsflat |

LEVEL_2 |

OFT |

Y |

Demodulate |

Chops subtracted |

demodulate |

LEVEL_1 |

DMD |

Y |

Flat Correct |

Flat field correction applied |

flat |

LEVEL_2 |

FLA |

N |

Align Arrays |

R array shifted to T array |

shift |

LEVEL_2 |

SFT |

N |

Split Images |

Data split by nod, HWP |

split |

LEVEL_2 |

SPL |

N |

Combine Images |

Chop cycles combined |

combine |

LEVEL_2 |

CMB |

N |

Subtract Beams |

Nod beams subtracted |

nodpolsub |

LEVEL_2 |

NPS |

N |

Compute Stokes |

Stokes parameters calculated |

stokes |

LEVEL_2 |

STK |

N |

Update WCS |

WCS added to header |

wcs |

LEVEL_2 |

WCS |

N |

Correct Opacity |

Corrected for atmospheric opacity |

opacity |

LEVEL_2 |

OPC |

Y |

Subtract Background |

Residual background removed |

bgsubtract |

LEVEL_2 |

BGS |

N |

Bin Pixels |

Pixels rebinned to increase S/N |

binpixels |

LEVEL_2 |

BIN |

N |

Merge Images |

Dithers merged to single map |

merge |

LEVEL_2 |

MRG |

Y |

Calibrate Flux |

Flux calibrated to physical units |

calibrate |

LEVEL_3 |

CAL |

Y |

Step |

Description |

PRODTYPE |

PROCSTAT |

Identifier |

Saved |

|---|---|---|---|---|---|

Construct Scan Map |

Source model iteratively derived |

scanmap |

LEVEL_2 |

SMP |

Y |

Calibrate Flux |

Flux calibrated to physical units |

calibrate |

LEVEL_3 |

CAL |

Y |

Step |

Description |

PRODTYPE |

PROCSTAT |

Identifier |

Saved |

|---|---|---|---|---|---|

Construct Scan Map |

Source model iteratively derived |

scanmappol |

LEVEL_2 |

SMP |

Y |

Compute Stokes |

Stokes parameters calculated |

stokes |

LEVEL_2 |

STK |

N |

Subtract IP |

Instrumental polarization removed |

ip |

LEVEL_2 |

IPS |

N |

Rotate Coordinates |

Polarization angle corrected to sky |

rotate |

LEVEL_2 |

ROT |

N |

Calibrate Flux |

Flux calibrated to physical units |

calibrate |

LEVEL_3 |

CAL |

Y |

Merge Images |

HWP sets merged to single map |

merge |

LEVEL_3 |

MRG |

Y |

Compute Vectors |

Polarization vectors calculated |

polmap |

LEVEL_4 |

PMP |

Y |

Grouping Level 0 Data for Processing¶

In order for the pipeline to successfully reduce a group of HAWC+ data together, all input data must share a common instrument configuration and observation mode, as well as target and filter band and HWP setting. These requirements translate into a set of FITS header keywords that must match in order for a set of data to be grouped together. These keyword requirements are summarized in the table below, for imaging and polarimetry data.

Mode |

Keyword |

Data Type |

Match Criterion |

|---|---|---|---|

All |

OBSTYPE |

string |

exact |

All |

FILEGPID |

string |

exact |

All |

INSTCFG |

string |

exact |

All |

INSTMODE |

string |

exact |

All |

SPECTEL1 |

string |

exact |

All |

SPECTEL2 |

string |

exact |

All |

PLANID |

string |

exact |

All |

NHWP |

float |

exact |

Imaging only |

SCNPATT |

string |

exact |

Imaging only |

CALMODE |

string |

exact |

Configuration and Execution¶

Installation¶

The HAWC pipeline is written entirely in Python. The pipeline is platform independent and has been tested on Linux, Mac OS X, and Windows operating systems. Running the pipeline requires a minimum of 16GB RAM, or equivalent-sized swap file.

The pipeline is comprised of six modules within the sofia_redux package:

sofia_redux.instruments.hawc, sofia_redux.pipeline,

sofia_redux.calibration, sofia_redux.scan, sofia_redux.toolkit, and

sofia_redux.visualization.

The hawc module provides the data processing

algorithms specific to HAWC, with supporting libraries from the

calibration, scan, toolkit, and visualization

modules. The pipeline module provides interactive and batch interfaces

to the pipeline algorithms.

External Requirements¶

To run the pipeline for any mode from the Redux interface, Python 3.8 or higher is required, as well as the following packages: astropy, astroquery, bottleneck, configobj, cycler, dill, joblib, matplotlib, numba, numpy, pandas, photutils, scikit-learn, and scipy.

Some display functions for the graphical user interface (GUI) additionally

require the PyQt5, pyds9, and regions packages. All required

external packages are available to install via the pip or conda package

managers. See the Anaconda environment file

(environment.yml), or the pip requirements file (requirements.txt)

distributed with sofia_redux for up-to-date version requirements.

Running the pipeline’s interactive display tools also requires an installation of SAO DS9 for FITS image display. See http://ds9.si.edu/ for download and installation instructions. The ds9 executable must be available in the PATH environment variable for the pyds9 interface to be able to find and control it. Please note that pyds9 is not available on the Windows platform.

Source Code Installation¶

The source code for the HAWC pipeline maintained by the SOFIA Data Processing Systems (DPS) team can be obtained directly from the DPS, or from the external GitHub repository. This repository contains all needed configuration files, auxiliary files, and Python code to run the pipeline on HAWC data in any observation mode.

After obtaining the source code, install the pipeline with the command:

python setup.py install

from the top-level directory.

Alternately, a development installation may be performed from inside the directory with the command:

pip install -e .

After installation, the top-level pipeline interface commands should be available in the PATH. Typing:

redux

from the command line should launch the GUI interface, and:

redux_pipe -h

should display a brief help message for the command line interface.

Configuration¶

The DRP pipeline requires a valid and complete configuration file to run. Configuration files are written in plain text, in the INI format readable by the configobj Python library. These files are divided into sections, specified by brackets (e.g. [section]), each of which may contain keyword-value pairs or subsections (e.g. [[subsection]]). The HAWC configuration file must contain the following sections:

Data configuration, including specifications for input and output file names and formats, and specifications for metadata handling

Pipeline mode definitions for each supported instrument mode, including the FITS keywords that define the mode and the list of steps to run

Pipeline step parameter definitions (one section for each pipeline step defined)

The pipeline is usually run with a default configuration file (sofia_redux/instruments/hawc/data/config/pipeconf.cfg), which defines all standard reduction steps and default parameters. It may be overridden with date-specific default values, defined in (sofia_redux/instruments/hawc/data/config/date_overrides/), or with user-defined parameters. Override configuration files may contain any subset of the values in the full configuration file. See Appendix: Sample Configuration Files for examples of override configuration files as well as the full default file.

The scan map reconstruction algorithm, run as a single pipeline step for Scan and Scan-Pol mode data, also has its own separate set of configuration files. These files are stored in the scan module, in sofia_redux/scan/data/configurations. They are read from this sub-directory in the order specified below.

Upon launch, scan map step will invoke the default configuration files (default.cfg) in the following order:

Global defaults from sofia_redux/scan/data/config/default.cfg

Instrument overrides from sofia_redux/scan/data/config/hawc_plus/default.cfg

Any configuration file may invoke further (nested) configurations, which are located and loaded in the same order as above. For example, hawc_plus/default.cfg invokes sofia/default.cfg first, which contains settings for SOFIA instruments not specific to HAWC.

There are also modified configurations for “faint”, “deep”, or “extended” sources, when one of these flags is set while running the scan map step. For example, the faint mode reduction parses faint.cfg from the above locations, after default.cfg was parsed. Similarly, there are extended.cfg and deep.cfg files specifying modified configurations for extended and deep modes, and a scanpol.cfg file specifying configurations specifically for Scan-Pol mode.

See Appendix: Sample Configuration Files for a full listing of the default configuration for the scan map algorithm.

Input Data¶

The HAWC pipeline takes as input raw HAWC data files, which contain binary tables of instrument readouts and metadata. The FITS headers contain data acquisition and observation parameters and, combined with the pipeline configuration files and other auxiliary files on disk, comprise the information necessary to complete all steps of the data reduction process. Some critical keywords are required to be present in the raw data in order to perform a successful grouping, reduction, and ingestion into the SOFIA archive. These are defined in the DRP pipeline in a configuration file that describes the allowed values for each keyword, in INI format (see Appendix: Required Header Keywords).

It is assumed that the input data have been successfully grouped before beginning reduction. The pipeline considers all input files in a reduction to be science files that are part of a single homogeneous reduction group, to be reduced together with the same parameters. The sole exception is that internal calibrator files (CALMODE=INT_CAL) may be loaded with their corresponding Chop-Nod or Nod-Pol science files. They will be reduced separately first, in order to produce flat fields used in the science reduction.

Auxiliary Files¶

In order to complete a standard reduction, the pipeline requires a number of files to be on disk, with locations specified in the DRP configuration file. Current default files described in the default configuration are stored along with the code, typically in the sofia_redux/instruments/hawc/data directory. See below for a table of all commonly used types of auxiliary files.

Auxiliary File |

File Type |

Pipe Step |

Comments |

|---|---|---|---|

Jump Map |

FITS |

Flux Jump |

Contains jump correction values per pixel |

Phase |

FITS |

Demodulate |

Contains phase delay in seconds for each pixel |

Reference Phase |

FITS |

Demod. Plots |

Contains reference phase angles for comparison with the current observation |

Sky Cal |

FITS |

Make Flat |

Contains a master sky flat for use in generating flats from INT_CALs |

Flat |

FITS |

Flat Correct |

Contains a back-up flat field, used if INT_CAL files are not available |

IP |

FITS |

Instrumental Polarization |

Contains q and u correction factors by pixel and band |

The jump map is used in a preparatory step before the pipeline begins processing to correct raw values for a residual electronic effect that results in discontinuous jumps in flux values. It is a FITS image that matches the dimensions of the raw flux values (128 x 41 pixels). Pixels for which flux jump corrections may be required have integer values greater than zero. Pixels for which there are no corrections necessary are zero-valued.

The phase files used in the Demodulate step should be in FITS format, with two HDUs containing phase information for the R and T arrays, respectively. The phases are stored as images that specify the timing delay, in seconds, for each pixel. The reference phase file used in the Demod Plots step is used for diagnostic purposes only: it specifies a baseline set of phase angle values, for use in judging the quality of internal calibrator files.

Normally, the pipeline generates the flat fields used in the Flat Correct step from internal calibrator (INT_CAL) files taken alongside the data. To do so, the Make Flats step uses a Sky Cal reference file, which has four image extensions: R Array Gain, T Array Gain, R Bad Pixel Mask, and T Bad Pixel Mask. The image in each extension should match the dimensions of the R and T arrays in the demodulated data (64 x 41 pixels). The Gain images should contain multiplicative floating-point flat correction factors. The Bad Pixel Mask images should be integer arrays, with value 0 (good), 1 (bad in R array), or 2 (bad in T array). Bad pixels, corresponding to those marked 1 or 2 in the mask extensions, should be set to NaN in the flat images. At a minimum, the primary FITS header for the flat file should contain the SPECTEL1 and SPECTEL2 keywords, for matching the flat filter to the input demodulated files.

When INT_CAL files are not available, the Flat Correct step may use a back-up flat file. This file should have the same format as the Sky Cal file, but the R Array Gain and T Array Gain values should be suitable for direct multiplication with the flux values in the Flat Correct step. There should be one back-up flat file available for each filter passband.

In addition to these files, stored with the DRP code, the pipeline requires several additional auxiliary files to perform flux calibration. These are tracked in the pipecal package, used to support SOFIA flux calibration for several instruments, including HAWC. The required files include response coefficient tables, used to correct for atmospheric opacity, and reference calibration factor tables, used to calibrate to physical units.

The instrumental response coefficients are stored in ASCII text files, with at least four white-space delimited columns as follows: filter wavelength, filter name, response reference value, and fit coefficient constant term. Any remaining columns are further polynomial terms in the response fit. The independent variable in the polynomial fit is indicated by the response filename: if it contains airmass, the independent variable is zenith angle (ZA); if alt, the independent variable is altitude in thousands of feet; if pwv, the independent variable is precipitable water vapor, in \(\mu m\). The reference values for altitude, ZA, and PWV are listed in the headers of the text files, in comment lines preceded with #.

Calibration factors are also stored in ASCII format, and list the correction factor by mode and HAWC filter band, to be applied to opacity-corrected data.

Some additional auxiliary files are used in reductions of flux standards, to assist in deriving the flux calibration factors applied to science observations. These include filter definition tables and standard flux tables, by date and source.

Auxiliary File |

File Type |

Pipe Step |

Comments |

|---|---|---|---|

Response |

ASCII |

Opacity Correct |

Contains instrumental response coefficients by altitude, ZA |

Calibration Factor |

ASCII |

Calibrate |

Contains reference calibration factors by filter band, mode |

Filter definition |

ASCII |

Standard Photometry |

Contains filter wavelength band and standard aperture definitions |

Standard flux |

ASCII |

Standard Photometry |

Contains reference flux values for a known source, by filter band |

Automatic Mode Execution¶

The DPS pipeline infrastructure runs a pipeline on previously-defined reduction groups as a fully-automatic black box. To do so, it creates an input manifest (infiles.txt) that contains relative paths to the input files (one per line). The command-line interface to the pipeline is run as:

redux_pipe infiles.txt

The command-line interface will read in the specified input files, use their headers to determine the observation mode, and accordingly the steps to run and any intermediate files to save. Output files are written to the current directory, from which the pipeline was called. After reduction is complete, the script will generate an output manifest (outfiles.txt) containing the relative paths to all output FITS files generated by the pipeline.

Optionally, in place of a manifest file, file paths to input files may be directly specified on the command line. Input files may be raw FITS files, or may be intermediate products previously produced by the pipeline. For example, this command will complete the reduction for a set of FITS files in the current directory, previously reduced through the calibration step of the pipeline:

redux_pipe *CAL*.fits

To customize batch reductions from the command line, the redux_pipe interface accepts a configuration file on the command line. This file may contain any subset of the full configuration file, specifying any non-default parameters for pipeline steps. An output directory for pipeline products and the terminal log level may also be set on the command line.

The full set of optional command-line parameters accepted by the redux_pipe interface are:

-h, --help show this help message and exit

-c CONFIG, --configuration CONFIG

Path to Redux configuration file.

-o OUTDIR, --out OUTDIR

Path to output directory.

-l LOGLEVEL, --loglevel LOGLEVEL

Log level.

Manual Mode Execution¶

In manual mode, the pipeline may be run interactively, via a graphical user interface (GUI) provided by the Redux package. The GUI is launched by the command:

redux

entered at the terminal prompt (Fig. 105). The GUI allows output directory specification, but it may write initial or temporary files to the current directory, so it is recommended to start the interface from a location to which the user has write privileges.

From the command line, the redux interface accepts an optional config file (-c) or log level specification (-l), in the same way the redux_pipe command does. Any pipeline parameters provided to the interface in a configuration file will be used to set default values; they will still be editable from the GUI.

Fig. 105 Redux GUI startup.¶

Basic Workflow¶

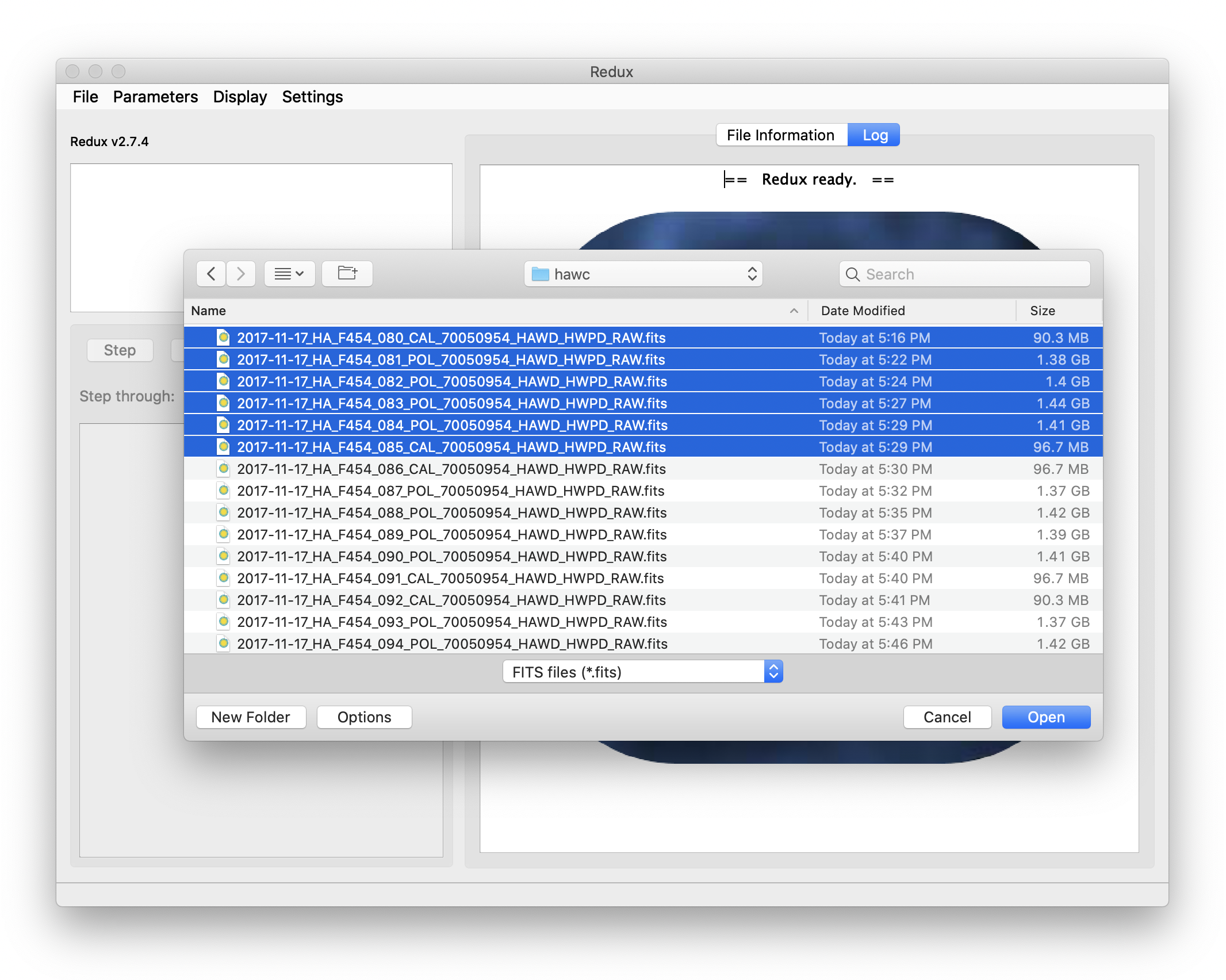

To start an interactive reduction, select a set of input files, using the File menu (File->Open New Reduction). This will bring up a file dialog window (see Fig. 106). All files selected will be reduced together as a single reduction set.

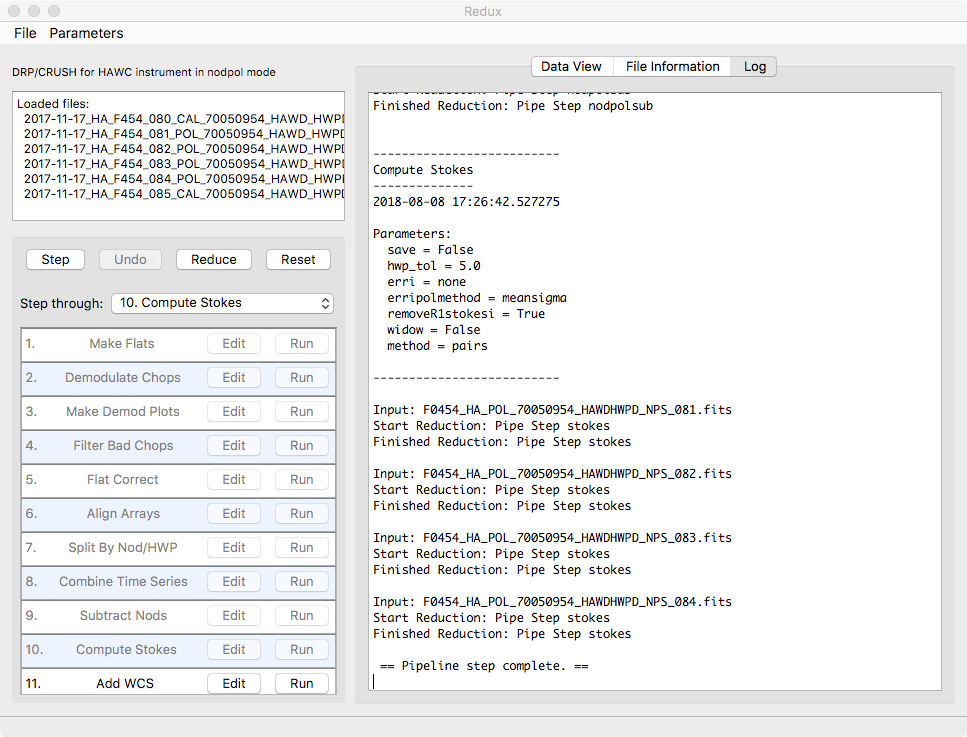

Redux will decide the appropriate reduction steps from the input files, and load them into the GUI, as in Fig. 107.

Fig. 106 Open new reduction.¶

Fig. 107 Sample reduction steps. Log output from the pipeline is displayed in the Log tab.¶

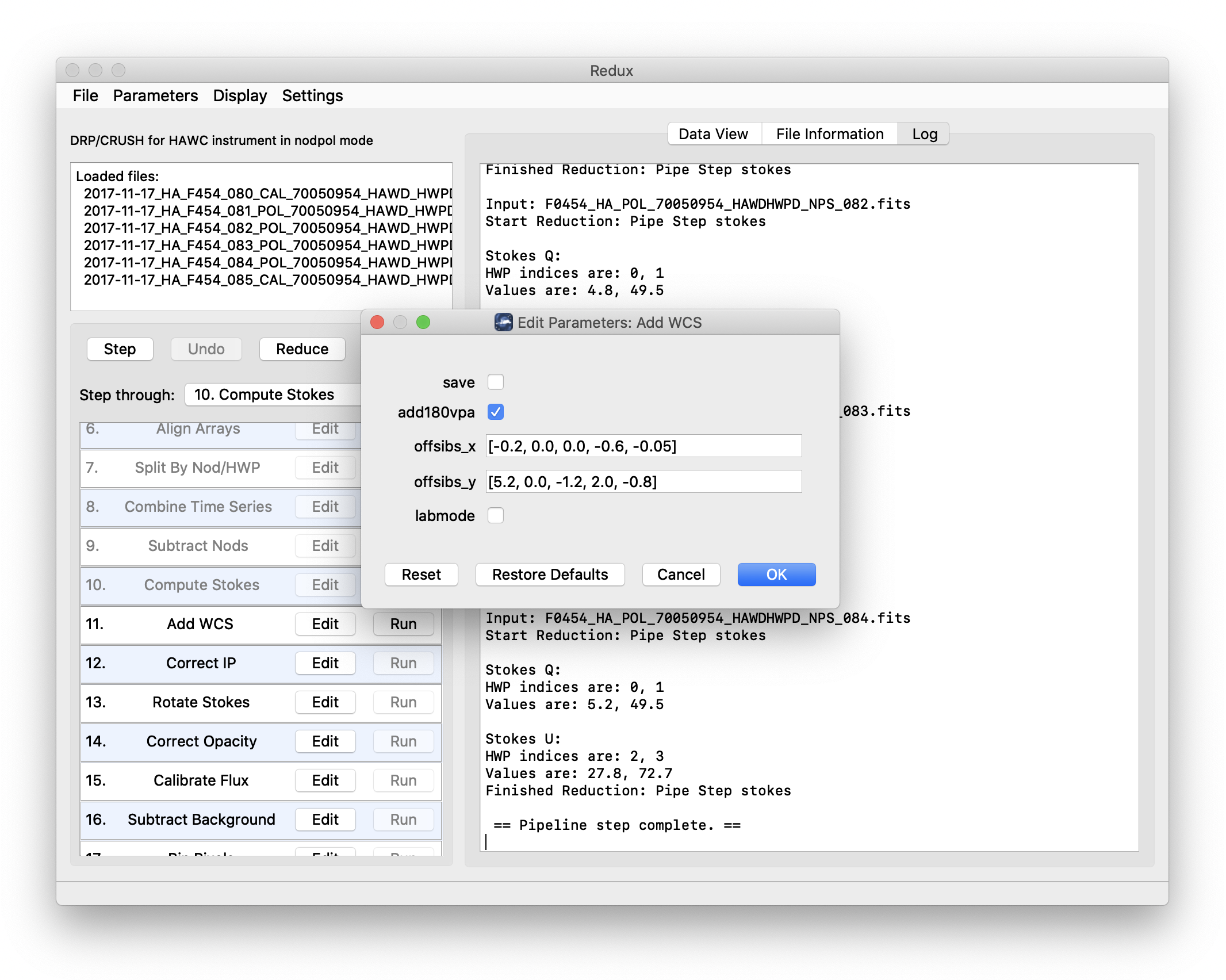

Each reduction step has a number of parameters that can be edited before running the step. To examine or edit these parameters, click the Edit button next to the step name to bring up the parameter editor for that step (Fig. 108). Within the parameter editor, all values may be edited. Click OK to save the edited values and close the window. Click Reset to restore any edited values to their last saved values. Click Restore Defaults to reset all values to their stored defaults. Click Cancel to discard all changes to the parameters and close the editor window.

Fig. 108 Sample parameter editor for a pipeline step.¶

The current set of parameters can be displayed, saved to a file, or reset all at once using the Parameters menu. A previously saved set of parameters can also be restored for use with the current reduction (Parameters -> Load Parameters).

After all parameters for a step have been examined and set to the user’s satisfaction, a processing step can be run on all loaded files either by clicking Step, or the Run button next to the step name. Each processing step must be run in order, but if a processing step is selected in the Step through: widget, then clicking Step will treat all steps up through the selected step as a single step and run them all at once. When a step has been completed, its buttons will be grayed out and inaccessible. It is possible to undo one previous step by clicking Undo. All remaining steps can be run at once by clicking Reduce. After each step, the results of the processing may be displayed in a data viewer. After running a pipeline step or reduction, click Reset to restore the reduction to the initial state, without resetting parameter values.

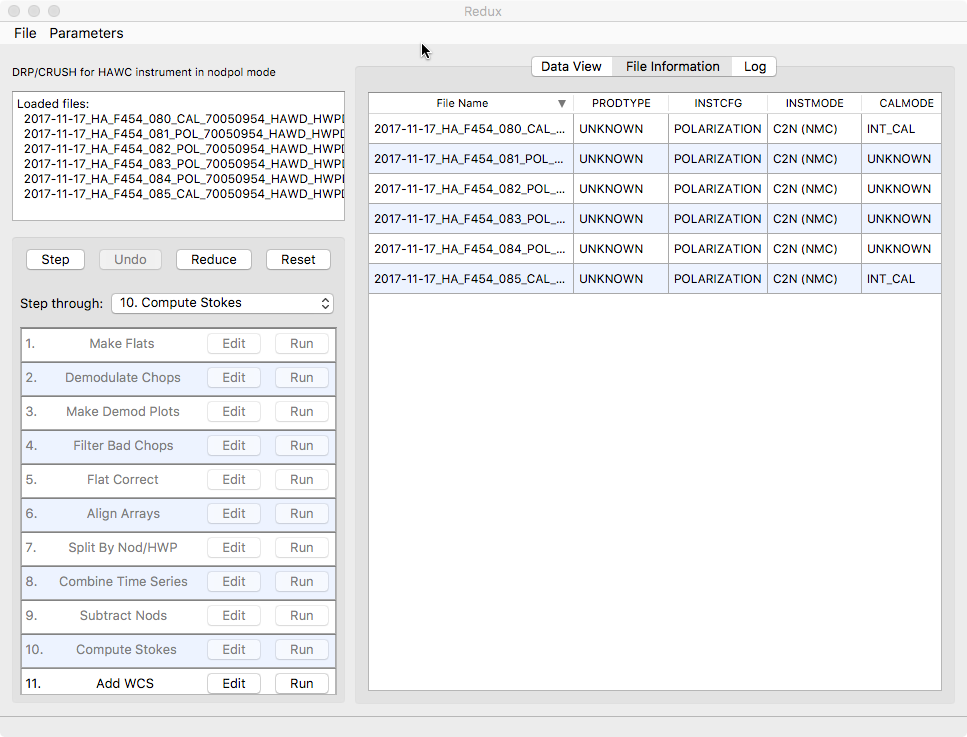

Files can be added to the reduction set (File -> Add Files) or removed from the reduction set (File -> Remove Files), but either action will reset the reduction for all loaded files. Select the File Information tab to display a table of information about the currently loaded files (Fig. 109).

Fig. 109 File information table.¶

Display Features¶

The Redux GUI displays images for quality analysis and display (QAD) in the DS9 FITS viewer. DS9 is a standalone image display tool with an extensive feature set. See the SAO DS9 site (http://ds9.si.edu/) for more usage information.

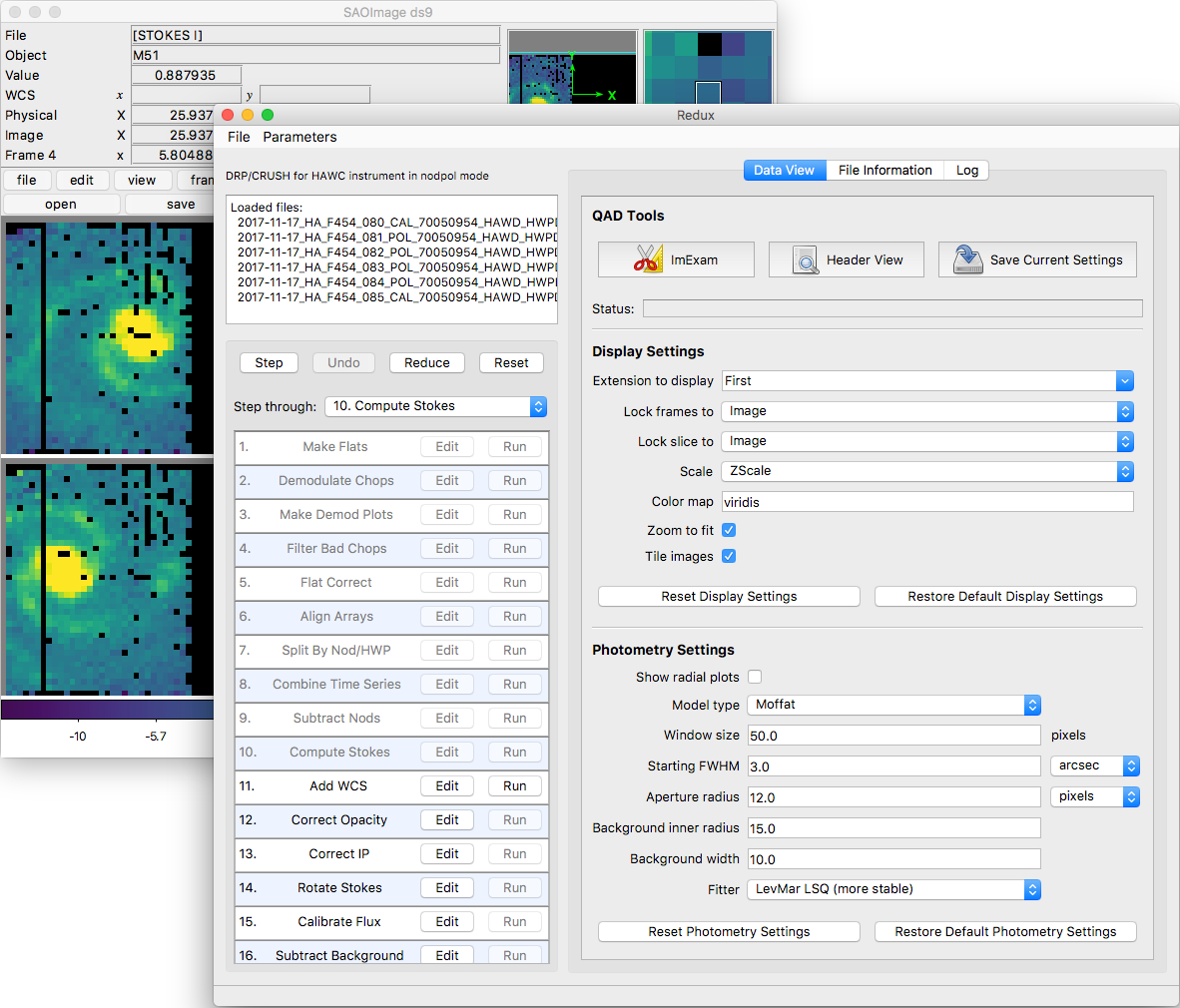

After each pipeline step completes, Redux may load the produced images into DS9. Some display options may be customized directly in DS9; some commonly used options are accessible from the Redux interface, in the Data View tab (Fig. 110).

Fig. 110 Data viewer settings and tools.¶

From the Redux interface, the Display Settings can be used to:

Set the FITS extension to display (First, or edit to enter a specific extension), or specify that all extensions should be displayed in a cube or in separate frames.

Lock individual frames together, in image or WCS coordinates.

Lock cube slices for separate frames together, in image or WCS coordinates.

Set the image scaling scheme.

Set a default color map.

Zoom to fit image after loading.

Tile image frames, rather than displaying a single frame at a time.

Changing any of these options in the Data View tab will cause the currently displayed data to be reloaded, with the new options. Clicking Reset Display Settings will revert any edited options to the last saved values. Clicking Restore Default Display Settings will revert all options to their default values.

In the QAD Tools section of the Data View tab, there are several additional tools available.